Speeding Up Edge Segment Based Moving Object Detection Using Background Subtraction in Video Surveillance System

Introduction

Moving object detection in video is the process of identifying object regions that move with respect to the background. In motion tracking methods, the movements of objects are constrained by their environment [1].

Detecting and tracking moving objects are widely used as low-level tasks in computer vision applications such as video surveillance. For motion detection without any object model, the most popular region-based approaches are background subtraction and optical flow. Background subtraction detects moving objects by subtracting an estimated background model from each image. This method is sensitive to illumination changes and to small movements in the background, e.g. leaves of trees [2]. It consists of subtracting the current image from a reference image, and it is commonly used mainly because of its simplicity and low computational cost [3]. The main reason for using background subtraction is that it works well when the background remains static for a long time.

After background subtraction has extracted the foreground, it represents the result as a binary image. To make the objects more recognizable and informative, they should be marked and recorded [4]. Real-time moving object detection is critical for a number of embedded applications such as security surveillance and visual tracking. It often acts as an initial step for further processing, such as classification of the detected moving object. To perform such more sophisticated operations, we first need an efficient and accurate algorithm for moving object detection [5].

Once the objects of interest have been extracted from the static background, their edges are detected. Edge detection is the process of identifying sharp changes in the intensity of an image. One approach is to compute the magnitude of the image gradient and compare it with a threshold. The gradient measures the rate of change of a multidimensional signal such as an image, so the edges are located at points where the magnitude of the rate of change in intensity exceeds a chosen threshold value. The threshold determines how many edges are detected in an image. Blob analysis is then performed to pinpoint the coordinates of the objects that have been identified by their edges. Blob analysis refers to the process of identifying points or regions of an image that differ in their properties from the surrounding area [6].
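The gradient-threshold approach to edge detection described above can be sketched as follows. This is a minimal illustration, not the implementation used in the paper: it assumes a grayscale image stored as a list of rows, central differences for the gradient, and hypothetical function names (`gradient_magnitude`, `detect_edges`). Plain Python lists are used only to keep the sketch dependency-free.

```python
def gradient_magnitude(img):
    """Central-difference gradient magnitude of a 2D grayscale image (list of rows)."""
    h, w = len(img), len(img[0])
    mag = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            # Clamp indices at the border so every pixel has two neighbours.
            gx = img[y][min(x + 1, w - 1)] - img[y][max(x - 1, 0)]
            gy = img[min(y + 1, h - 1)][x] - img[max(y - 1, 0)][x]
            mag[y][x] = (gx * gx + gy * gy) ** 0.5
    return mag


def detect_edges(img, threshold):
    """Mark pixels whose gradient magnitude exceeds the threshold."""
    return [[1 if m > threshold else 0 for m in row]
            for row in gradient_magnitude(img)]


# A dark left half next to a bright right half: one vertical step edge.
image = [[0, 0, 10, 10] for _ in range(4)]
edges = detect_edges(image, threshold=5)        # edge found at the step
no_edges = detect_edges(image, threshold=20)    # threshold too high, nothing found
```

Raising the threshold from 5 to 20 removes every edge in this toy image, which illustrates how the threshold controls the number of detected edges.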

A typical moving object detection algorithm has the following stages: (a) estimation of the stationary part of the observed scene (the background) and of its statistical characteristics; (b) computation of difference images between frames taken at different times, and between the sequence and the image of the stationary part of the scene; (c) discrimination of the regions belonging to objects [7].

Morphology

Morphology is a broad set of image processing operations that process images based on shapes. Morphological operations apply a structuring element to an input image, creating an output image of the same size. In a morphological operation, the value of each pixel in the output image is based on a comparison of the corresponding pixel in the input image with its neighbors. By choosing the size and shape of the neighborhood, you can construct a morphological operation that is sensitive to specific shapes in the input image. The most basic morphological operations are dilation and erosion. Dilation adds pixels to the boundaries of objects in an image, while erosion removes pixels on object boundaries. The number of pixels added or removed from the objects in an image depends on the size and shape of the structuring element used to process the image. In the morphological dilation and erosion operations, the state of any given pixel in the output image is determined by applying a rule to the corresponding pixel and its neighbors in the input image. The rule used to process the pixels defines the operation as a dilation or an erosion[8].

In this proposal we use the morphological close operation, which is a dilation followed by an erosion using the same structuring element for both operations. Its purpose is to fill small gaps between regions of the same object, so that the object is not split into scattered, poorly formed fragments, and to suppress small noisy holes inside those regions.
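As a hedged illustration of the close operation, the sketch below applies a dilation followed by an erosion to a small binary image. The 3x3 square structuring element, the border handling (pixels outside the image are ignored) and the function names are assumptions made for this example; the paper does not state which structuring element was used.

```python
def _window(img, y, x):
    """Values in the 3x3 window around (y, x), clipped to the image bounds."""
    h, w = len(img), len(img[0])
    return [img[j][i]
            for j in range(max(y - 1, 0), min(y + 2, h))
            for i in range(max(x - 1, 0), min(x + 2, w))]


def dilate(img):
    """A pixel becomes 1 if any pixel in its 3x3 window is 1."""
    return [[int(any(_window(img, y, x))) for x in range(len(img[0]))]
            for y in range(len(img))]


def erode(img):
    """A pixel stays 1 only if every pixel in its 3x3 window is 1."""
    return [[int(all(_window(img, y, x))) for x in range(len(img[0]))]
            for y in range(len(img))]


def close(img):
    """Morphological close: dilation followed by erosion, same structuring element."""
    return erode(dilate(img))


# One object split into two fragments by a one-pixel gap.
fragmented = [
    [0, 0, 0, 0, 0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0, 0, 0, 0, 0],
    [0, 0, 1, 1, 0, 1, 1, 0, 0],
    [0, 0, 0, 0, 0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0, 0, 0, 0, 0],
]
closed = close(fragmented)
```

In this example the gap between the two fragments is filled while the outer extent of the object is unchanged, so later region analysis sees a single connected region.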

Proposed moving object detection and tracking

Traditional edge segment based methods suffer from many false alarms. These come from inaccurate matching of background edges across an image sequence, caused by variation in edge position and shape due to illumination change, background movement, camera movement and noise. Each edge fragment varies differently, even for the same object in the same scene. It is therefore natural to model every background edge segment individually and to set an adequate threshold for each edge segment, unlike traditional methods based on a single global distance threshold. We rely on per-region statistics for this purpose: an edge segment based statistical background model estimates background edge behavior by observing a number of reference frames, and it keeps statistics of motion variation, shape variation and segment size variation for every background edge segment. Using these statistics to set an edge-specific automatic threshold improves the accuracy of background edge matching.

Background modeling

To extract the statistics of each background edge segment across multiple consecutive frames, the proposed algorithm first extracts the edges of each frame in the training sequence using the Canny edge operator.

To estimate the behavior of the different edges (especially variation in edge position, shape and size), a mask approximating a Gaussian kernel is placed on every edge pixel position of the extracted edges, and convolution is performed to create an edge distribution map.

Given a set of edge distribution maps extracted from N frames (where N is the number of frames in the training sequence), the proposed algorithm superimposes them and adds them together to obtain the background edge frequency accumulation map. This accumulation map contains useful information such as edge motion statistics and edge shape variation statistics.

The next step is to normalize the background edge accumulation map. Normalization gives, for each position in the distribution, the relative probability of finding an edge pixel there.

The distribution map generated this way is called the statistical edge distribution map.
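The background modeling steps above can be sketched as follows. Several details are assumptions made for this example, since the text does not fix them: the 3x3 kernel weights, normalization by the peak of the accumulation map, and the function names. The binary edge maps are taken as given; the Canny operator itself is not implemented here.

```python
# 3x3 Gaussian approximation (assumed; the mask size and weights are not given in the text).
KERNEL = [[1, 2, 1],
          [2, 4, 2],
          [1, 2, 1]]


def edge_distribution_map(edges):
    """Stamp the kernel on every edge pixel (same as convolving the binary edge map)."""
    h, w = len(edges), len(edges[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            if edges[y][x]:
                for dy in (-1, 0, 1):
                    for dx in (-1, 0, 1):
                        yy, xx = y + dy, x + dx
                        if 0 <= yy < h and 0 <= xx < w:
                            out[yy][xx] += KERNEL[dy + 1][dx + 1]
    return out


def statistical_edge_map(edge_frames):
    """Accumulate the distribution maps of all training frames, then normalize."""
    h, w = len(edge_frames[0]), len(edge_frames[0][0])
    acc = [[0.0] * w for _ in range(h)]
    for edges in edge_frames:
        dist = edge_distribution_map(edges)
        for y in range(h):
            for x in range(w):
                acc[y][x] += dist[y][x]
    peak = max(max(row) for row in acc)
    # Assumed normalization: divide by the peak so values lie in [0, 1].
    return [[v / peak for v in row] for row in acc] if peak else acc


# Two training frames in which the same edge pixel jitters by one column.
frame_a = [[0, 0, 0, 0, 0], [0, 1, 0, 0, 0], [0, 0, 0, 0, 0]]
frame_b = [[0, 0, 0, 0, 0], [0, 0, 1, 0, 0], [0, 0, 0, 0, 0]]
prob = statistical_edge_map([frame_a, frame_b])
```

The two jittered positions receive the highest relative probability, their neighbours receive lower values, and positions never touched by the edge stay at zero. The spread of the non-zero area reflects how much the edge moved during training.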

Static background edge list creation

The static background edge list represents the mean (peak) positions in each edge segment distribution. Unique labels are assigned to the newly extracted edge segments, so that every background edge segment yields an edge labeling map. The labeled area represents the motion statistics of the background edge over the training sequence, and it also defines the search boundary for a candidate background edge segment.

For each edge segment, the relative mean probability of edge appearance is calculated by averaging the values at all of its edge pixel locations. The variance in distance of the probability distribution is also calculated and used as the matching threshold for the associated segment.

For more details on this background modeling, see our previous work [9].

Moving object detection

The proposed method extracts moving objects from an input image by using the background model, as depicted in Figure(1). It consists of the following steps: (a) gradient map generation; (b) gradient difference image calculation; (c) gradient thresholding and binarization; (d) calculation of the statistics of the resulting binary segments; (e) comparison of these statistics with the background segment statistics to identify moving segments; (f) drawing a colored bounding box around each moving region; (g) comparison of the statistics from step (d) in the current round with those of the next round, so that regions that resemble each other receive a bounding box of the same color.

[Figure(1) flowchart. Round N: generate gradient map statistics for the input image and for frame no. 1 of the sequence; calculate the gradient difference map; binarize the difference map by a threshold; calculate the statistics of the resulting frame edge segments; if a segment is found in the background model statistics, delete it from the frame, otherwise mark it as a moving segment; apply the close morphology operator; identify regions and their centroids, areas and bounding boxes; save these features for the next round comparison. Round N+1: if a region resembles one from round N, draw its bounding box with the same color as in round N, otherwise draw the bounding box with a new color.]

Figure(1): The overall process of the proposed system.

First we compute the gradients of both the input image and frame(1). Gradients provide structural information as well as edge direction, which makes them a convenient first step for isolating the moving objects.

Figure(2) shows the input image and frame(1) gradient images of a video sequence.

We then compute the difference between these two gradients by image subtraction and binarize the result using an appropriate threshold. This step concentrates the matching process on specific regions instead of the whole frame. Figure(3) shows the gradient difference map and its binarization after thresholding.
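A minimal sketch of this step, assuming the two gradient maps are already available as lists of rows of gradient magnitudes. The use of the absolute difference, the threshold value and the function name are illustrative assumptions, not values taken from the paper.

```python
def binarize_gradient_difference(grad_input, grad_reference, threshold):
    """Return 1 where the two gradient maps differ by more than the threshold."""
    return [[1 if abs(a - b) > threshold else 0
             for a, b in zip(row_in, row_ref)]
            for row_in, row_ref in zip(grad_input, grad_reference)]


# Column 1: a background edge whose strength changes slightly (e.g. illumination).
# Column 3: an edge that exists only in the input image (candidate moving region).
grad_reference = [[0, 8, 0, 0],
                  [0, 8, 0, 0]]
grad_input = [[0, 9, 0, 7],
              [0, 9, 0, 7]]
candidates = binarize_gradient_difference(grad_input, grad_reference, threshold=3)
```

Only the new edge survives the threshold, so the later matching stage has to examine a single candidate region rather than the whole frame.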

The next step is to test whether the suspicious regions isolated in the previous step are really moving objects, since the difference map may also contain regions caused by waving trees or camera movement. To decide whether a region is truly a moving object, we use our background model, which is well suited to handling such situations.

The background edge segment matching in our proposed method uses the background edge segment statistics. A candidate moving edge segment is examined for a match using a wider edge distribution map if the corresponding background edges have high motion variation statistics, and a narrower edge distribution map where the background segment has a history of less motion variation. This edge-specific flexibility during background edge segment matching drastically reduces the false alarm rate. To match a candidate moving edge segment j, the system first takes the thresholded binary image of Figure(3)(b), sets the pixel values of all candidate moving regions to "1", and then multiplies this image by the edge labeling maps discussed in [9] to find the corresponding comparable regions in the background. This works because multiplying "1" by any number yields that number (here, the corresponding background edge label).

In this way we find the corresponding background regions directly, instead of comparing the candidate moving segment with all background edge segments, which may be time consuming.

Now we have the following cases:

- If the multiplication results are all zeros, the candidate moving edge segment has no corresponding background segment, so it must be considered a moving edge segment.

- If any of the multiplication results are non-zero, these values are the labels of the corresponding background edge segments, and the candidate moving edge segment is compared against the statistics of those segments. Figure(4) shows how the correspondence is found.
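The two cases above can be sketched with an element-wise product of a candidate mask and a background label map. The data layout (lists of rows, label 0 for "no background edge") and the function name are assumptions made for this example.

```python
def corresponding_labels(candidate_mask, label_map):
    """Multiply a 0/1 candidate mask by the label map and collect the non-zero labels."""
    labels = set()
    for mask_row, label_row in zip(candidate_mask, label_map):
        for m, label in zip(mask_row, label_row):
            product = m * label  # 1 * label == label, 0 * label == 0
            if product:
                labels.add(product)
    return labels


# Background label map: one labeled segment region (label 5) on the left.
label_map = [[5, 5, 0, 0, 0],
             [5, 5, 0, 0, 0],
             [0, 0, 0, 0, 0]]

# Candidate that overlaps the labeled area, and one that does not.
overlapping = [[0, 1, 0, 0, 0],
               [0, 1, 0, 0, 0],
               [0, 0, 0, 0, 0]]
isolated = [[0, 0, 0, 0, 0],
            [0, 0, 0, 1, 1],
            [0, 0, 0, 1, 1]]

match_overlapping = corresponding_labels(overlapping, label_map)  # compare with segment 5
match_isolated = corresponding_labels(isolated, label_map)        # empty: moving segment
```

An empty result corresponds to the first case (no background correspondence, so the segment is taken as moving); a non-empty result names the background segments whose statistics must be checked.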


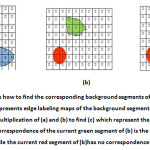

Figure(4): Finding the background segments that correspond to the segments of the current frame. (a) Edge labeling maps of the background segments; (b) current frame segments; (c) the product of (a) and (b), which represents the correspondence. Note that the green segment in (b) corresponds to the background segment labeled (5), while the red segment in (b) has no correspondence in (a).


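For a given sample edge segment $e_{ji}$ at frame $i$, to determine whether it is a moving edge segment, we compute the average eight-neighborhood distance $D_{cm}(e_{ji})$ from all points of $e_{ji}$ to the closest point of the background segment in the static background edge list:

$$D_{cm}(e_{ji}) = \frac{1}{n_j} \sum_{k=1}^{n_j} d(p_k)$$

where $n_j$ is the number of points in $e_{ji}$ and $d(p_k)$ is the distance from its $k$-th point $p_k$ to the closest background point.

Here, $e_{ji}$ is a moving edge segment if it satisfies

$$D_{cm}(e_{ji}) \geq 2.5 \times \text{corresponding threshold},$$

and $e_{ji}$ is a background edge segment otherwise.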
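The decision rule can be sketched as below. Interpreting the eight-neighborhood distance as the chessboard (Chebyshev) distance is an assumption of this example, as are the function names and the representation of a segment as a list of (row, column) points. The factor 2.5 is the one stated above.

```python
def chessboard(p, q):
    """Eight-neighbourhood (chessboard) distance between two pixel coordinates."""
    return max(abs(p[0] - q[0]), abs(p[1] - q[1]))


def average_closest_distance(segment, background):
    """Mean, over the segment's points, of the distance to the closest background point."""
    return sum(min(chessboard(p, b) for b in background) for p in segment) / len(segment)


def is_moving(segment, background, threshold, factor=2.5):
    """Moving if the average distance reaches factor * the segment-specific threshold."""
    return average_closest_distance(segment, background) >= factor * threshold


background = [(0, 0), (0, 1), (0, 2)]
near = [(1, 0), (1, 1), (1, 2)]   # one pixel away from the background segment
far = [(5, 0), (5, 1), (5, 2)]    # five pixels away

near_is_moving = is_moving(near, background, threshold=1.0)
far_is_moving = is_moving(far, background, threshold=1.0)
```

With a segment threshold of 1.0, the nearby segment (average distance 1) is treated as background, while the distant one (average distance 5) is classified as moving.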

Object tracking

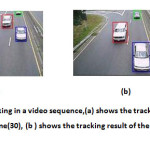

As mentioned above, three features are extracted for each moving region: centroid, area and bounding box. After the morphological close operation, the bounding box of each moving object is drawn in a color that is unique within the scene. To do so, the centroid, the area and the bounding box color of each region are saved for the next round: a moving region that appears in the next iteration receives the same bounding box color as the saved region with the nearest centroid and area. Figure(5) shows the tracking results for two frames taken at different times.
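A minimal sketch of this color carry-over between rounds is given below. The resemblance test (a maximum centroid shift and a maximum area ratio), its default values, the dictionary layout and the function names are all assumptions made for illustration; the paper does not specify how "nearest" is quantified. Areas are assumed to be positive.

```python
def centroid_distance(a, b):
    """Euclidean distance between the centroids of two regions."""
    dx = a["centroid"][0] - b["centroid"][0]
    dy = a["centroid"][1] - b["centroid"][1]
    return (dx * dx + dy * dy) ** 0.5


def resembles(a, b, max_shift=20.0, max_area_ratio=1.5):
    """Assumed test: centroids are close and areas are of similar size."""
    ratio = max(a["area"], b["area"]) / min(a["area"], b["area"])
    return centroid_distance(a, b) <= max_shift and ratio <= max_area_ratio


def carry_colors(previous, current, new_colors):
    """Give each current region the color of the nearest resembling previous region."""
    fresh = iter(new_colors)
    for region in current:
        matches = [p for p in previous if resembles(p, region)]
        if matches:
            nearest = min(matches, key=lambda p: centroid_distance(p, region))
            region["color"] = nearest["color"]
        else:
            region["color"] = next(fresh)  # no resemblance: new object, new color
    return current


round_n = [
    {"centroid": (10, 10), "area": 100, "color": "red"},
    {"centroid": (50, 50), "area": 400, "color": "green"},
]
round_n1 = carry_colors(
    round_n,
    [{"centroid": (12, 11), "area": 110}, {"centroid": (200, 200), "area": 90}],
    new_colors=["blue", "yellow"],
)
```

The first region of round N+1 keeps the red box of the object it resembles, while the second region has no counterpart in round N and receives a new color. This simple sketch does not prevent two current regions from matching the same previous region.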


Figure(5): Object tracking in a video sequence. (a) Tracking result for three different objects at frame(30); (b) tracking result for the same three objects at frame(37).


Conclusion

In this paper we proposed a new technique for moving object detection and tracking. The technique combines two moving object detection techniques and benefits from the advantages of each, so that they complement each other, achieve good detection results, and reduce the total processing time, by up to 50% compared with relying on the statistical technique alone. First, background subtraction is applied using the difference between the gradients of the background and input images. This difference immediately yields the candidate moving regions, so that we can concentrate on those regions instead of the whole image.

The second technique is then used to check whether these extracted regions are really moving objects rather than other waving things (such as tree branches), or whether the gradient difference results from illumination variation. The statistics of the background segments resolve such false moving object detections.

References

- K. Kausalya and S. Chitrakala, "Idle Object Detection in Video for Banking ATM Applications", Research Journal of Applied Sciences, Engineering and Technology 4(24): 5350-5356, 2012.

- Masayuki Yokoyama and Tomaso Poggio, "A Contour-Based Moving Object Detection and Tracking", ICCV, 2005.

- Duarte Duque, Henrique Santos and Paulo Cortez, "Moving Object Detection Unaffected by Cast Shadows, Highlights and Ghosts", IEEE, 2005.

- L. Menaka, M. Kalaiselvi Geetha and M. Chitra, "Real Time Object Identification for Automated Video Surveillance System", International Conference on Information Systems and Computing (ICISC-2013), India.

- Arnab Roy, Sanket Shinde and Kyoung-Don Kang, "An Approach for Efficient Real Time Moving Object Detection", 2009.

- Armando Nava and David Yoon, "Demo: Real-time Edge-Detection-Based Motion Tracking", http://www.comm.utoronto.ca/~dkundur/course/real-time-digital-signal-processing/.

- Pranab Kumar Dhar, Mohammad Ibrahim Khan, Ashoke Kumar Sen Gupta, D.M.H. Hasan and Jong-Myon Kim, "An Efficient Real Time Moving Object Detection Method for Video Surveillance System", International Journal of Signal Processing, Image Processing and Pattern Recognition, Vol. 5, No. 3, September 2012.

- MATLAB Help.

- Jalal H. Awad and Amir S. Almallah, "Background Construction of Video Frames in Video Surveillance System Using Pixel Frequency Accumulation", Oriental Journal of Computer Science & Technology, ISSN: 0974-6471, Vol. 7, No. 1, April 2014.

This work is licensed under a Creative Commons Attribution 4.0 International License.