Sheo Das¹, Dr. Kuldeep Singh Raghuwanshi²

¹M.Tech Student, AIET Jaipur

²Professor, CSE Department, AIET Jaipur

Article Publishing History

Article Published : 10 Jul 2014


ABSTRACT:

A metasearch engine is a search engine that utilizes multiple search engines: when a metasearch engine receives a query from a user, it invokes the underlying search engines to retrieve useful information for the user. Most of the data on the Web is in the form of text or images. A good database selection algorithm should identify potentially useful databases accurately. Many approaches have been proposed to tackle the database selection problem. These approaches differ in the database representatives they use to indicate the contents of each database, the measures they use to indicate the usefulness of each database with respect to a given query, and the techniques they employ to estimate that usefulness. In this paper, we design an algorithm that selects the most appropriate search engines for a user query and compare it with an existing algorithm.

KEYWORDS:

Past Queries; Search engine; Algorithms; Meta Search; Ranking.

Cite this article as:

Das S, Raghuwanshi K. S. Search Engine Selection Approach In Metasearch Using Past Queries. Orient. J. Comp. Sci. and Technol;7(1). Available from: http://computerscijournal.org/?p=773

INTRODUCTION

Search engines are widely used for information retrieval, and two types of search engines exist. General-purpose search engines aim to provide the capability to search all pages on the Web. Special-purpose search engines, on the other hand, focus on documents in confined domains, such as the documents of an organization or of a specific subject area [11]. However, no single search engine can completely solve the problem of Internet information retrieval, because each search engine can search only its own database.

Information retrieval (IR) is a subfield of computer science concerned with presenting relevant information, collected from web information sources, to users in response to a search. Various types of IR tools have been created solely to search for information on the Web [3]. Apart from the heavily used search engines (SEs), other useful tools are deep-web search portals, web directories and meta-search engines (MSEs) [3]. A person engaged in an information-seeking process has one or more goals in mind and uses a search system as a tool to help achieve those goals.

Metasearch engines are being developed in response to the increasing availability of conventional search engines [13]. The major benefits of MSEs are their ability to combine the coverage of multiple search engines and to reach the deep web. Search plans are constrained by the available resources: how much time should be allocated to the query and how much of the Internet's resources it should consume [13]. For each search engine selected by the database selector, the document selector component determines which documents to retrieve from that search engine's database [11]. When a user poses a query to the metasearch engine through the user interface, the metasearch engine is responsible for identifying the underlying search engines that hold documents relevant to the query [15]. To enable search engine selection, some information that represents the contents of the documents of each component search engine must first be collected. Such information is called the representative of the search engine [1]. To find the relevant information, different similarity measures are used to estimate the relevance between a document and the user query [15].

The rest of the paper is organized as follows. Section II describes the metasearch engine (MSE), Section III reviews related work, Section IV presents the proposed work, Section V discusses the experimental results, Section VI presents a result-based comparison, and Section VII gives the conclusion and future scope.

METASEARCH ENGINE (MSE)

A metasearch engine overcomes this limitation by sending the user's query to a set of search engines, collecting the results from them and displaying only the relevant records [2]. In other words, a metasearch engine is a system that provides unified access to multiple existing search engines [11]. A metasearch engine is generally composed of three parts: a search request preprocessing part, a search interface agent part and a search result processing part [12]. There are a number of reasons for developing a metasearch engine [11]:

- To increase the search coverage of the Web;

- To solve the scalability problem of searching the Web;

- To facilitate the invocation of multiple search engines;

- To improve the retrieval effectiveness.

The reference software components of a metasearch engine [11, 10] are described below.

Database selector

In many cases, a large percentage of the local databases will be useless with respect to a query. Sending the query to the search engines of useless databases causes several problems. For example, if a large number of documents is returned from useless databases, the metasearch engine needs more effort to identify the useful documents. The problem of identifying potentially useful databases to search for a given query is known as the database selection problem. The database selector component is responsible for identifying potentially useful databases for each user query. A good database selector should correctly identify as many potentially useful databases as possible while minimizing the number of useless databases wrongly identified as potentially useful.
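The two goals in the last sentence correspond to the recall and the precision of the selected set of databases. The minimal sketch below measures both. It assumes the truly useful databases for a query are known, for example from relevance judgments. The database names are hypothetical.

```python
def selection_quality(selected, useful):
    """Return (precision, recall) of one database selection.

    selected: databases the selector chose to search.
    useful:   databases that really hold relevant documents (assumed known).
    """
    selected, useful = set(selected), set(useful)
    hits = len(selected & useful)  # useful databases that were selected
    # Precision: share of selected databases that are useful.
    precision = hits / len(selected) if selected else 0.0
    # Recall: share of useful databases that were selected.
    recall = hits / len(useful) if useful else 0.0
    return precision, recall


p, r = selection_quality({"db1", "db2", "db3", "db7"},
                         {"db1", "db2", "db3", "db4", "db5"})
print(f"precision={p:.2f} recall={r:.2f}")  # precision=0.75 recall=0.60
```

Selecting every database maximizes recall but lowers precision. A good selector keeps both high.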

Document selector

The document selector component determines which documents to retrieve from the database of each selected search engine. The goal is to retrieve as many potentially useful documents from the search engine as possible while minimizing the retrieval of useless documents.

Query dispatcher

The query dispatcher is responsible for establishing a connection with the server of each selected search engine and passing the query to it.

Result merger

After the results from selected component search engines are returned to the metasearch engine, the result merger combines the results into a single ranked list. A good result merger should rank all returned documents in descending order of their global similarities with the user query.
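The minimal sketch below shows such a merger. It assumes each returned document already carries an estimate of its global similarity with the query; in practice, producing that estimate is the hard part. A document returned by several engines is assumed to keep its highest score. Engine names, document ids and scores are hypothetical.

```python
from typing import Dict, List, Tuple


def merge_results(results: Dict[str, List[Tuple[str, float]]]) -> List[Tuple[str, float]]:
    """Merge per-engine result lists into one list ranked by global similarity.

    results maps an engine name to (doc_id, global_similarity) pairs.
    Assumption: a duplicate document keeps its highest similarity.
    """
    best: Dict[str, float] = {}
    for hits in results.values():
        for doc_id, score in hits:
            if score > best.get(doc_id, float("-inf")):
                best[doc_id] = score
    # Descending global similarity; ties broken by doc id for determinism.
    return sorted(best.items(), key=lambda item: (-item[1], item[0]))


merged = merge_results({
    "se1": [("d1", 0.9), ("d2", 0.4)],
    "se2": [("d3", 0.7), ("d1", 0.8)],
})
print(merged)  # [('d1', 0.9), ('d3', 0.7), ('d2', 0.4)]
```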

RELATED WORK

When a metasearch engine receives a query from a user, it invokes the database selector to select the component search engines to which the query is sent. A good database selection algorithm should identify potentially useful databases accurately. Many approaches have been proposed to tackle the database selection problem [16]. The approach in [15] utilizes the retrieved results of past queries to select the appropriate search engines for a specific user query. The selection is based on the relevance value between the user query and each search engine.

In ALIWEB [11], an often human-generated representative in a fixed format is used to represent the contents of each local database or site. In WAIS [11], for a given query, the database descriptions are used to rank the component databases according to how similar they are to the query. In D-WISE [11], the representative of a component search engine consists of the document frequency of each term in the component database as well as the number of documents in the database. Therefore, the representative of a database with n distinct terms contains n + 1 quantities (the n document frequencies and the cardinality of the database) in addition to the n terms. In the Collection Retrieval Inference Network (CORI-Net) [11, 15, 6, 8], database selection uses two pieces of information for each distinct term: its document frequency and its search engine frequency. In gGlOSS, the usefulness of a database is sensitive to the similarity threshold used. As a result, gGlOSS can differentiate a database with many moderately similar documents from a database with a few highly similar documents. Another usefulness measure is the Estimated Similarity of the Most Similar Document (ESoMSD). It indicates the best that we can expect from a database, since no other document in the database can have a higher similarity with the query.
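The D-WISE representative described above can be sketched as follows. Documents are assumed to be already tokenized, and the sample data are hypothetical.

```python
from collections import Counter
from typing import Dict, List, Tuple


def dwise_representative(docs: List[List[str]]) -> Tuple[Dict[str, int], int]:
    """Build a D-WISE style representative of one component database.

    docs: each document given as a list of (already tokenized) terms.
    Returns the document frequency of every distinct term plus the number
    of documents, i.e. n + 1 quantities for n distinct terms.
    """
    df = Counter()
    for doc in docs:
        df.update(set(doc))  # count each term at most once per document
    return dict(df), len(docs)


df, size = dwise_representative([
    ["meta", "search", "engine"],
    ["search", "query"],
    ["query", "log", "query"],
])
print(df["query"], df["search"], size)  # 2 2 3
```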

Learning-based approaches [11] predict the usefulness of a database for new queries based on the retrieval experience with the database from past queries. This retrieval experience may be obtained in a number of ways. For example, training queries can be used, and the retrieval knowledge of each component database with respect to these training queries can be obtained in advance (i.e., before the database selector is enabled). The MRDD (Modeling Relevant Document Distribution) approach [18] is a static learning approach. During learning, a set of training queries is utilized, and each training query is submitted to every component database. From the documents returned by a database for a given query, all relevant documents are identified, and a vector reflecting the distribution of the relevant documents is obtained and stored. Specifically, the vector has the format <r1, r2, ..., rs>, where ri is a positive integer indicating that the ri top-ranked documents must be retrieved from the database in order to obtain i relevant documents for the query. SavvySearch (www.search.com) is a metasearch engine employing the dynamic learning approach. In SavvySearch [11, 13], the ranking score of a component search engine with respect to a query is computed from the past retrieval experience of using the terms in the query. The ProFusion approach [15, 11] is a hybrid learning approach that combines the static and dynamic learning approaches. In ProFusion, when a user query is received by the metasearch engine, the query is first mapped to one or more categories: it is mapped to every category that has at least one term belonging to the user query. The Relevant Document Distribution Estimation (ReDDE) method for resource selection [21] estimates the distribution of relevant documents across the set of available search engines. To make its estimate, ReDDE considers both search engine size and content similarity.
ReDDE is a resource selection algorithm that normalizes for search engine size.
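The MRDD distribution vector described above can be derived from relevance judgments on a database's ranked results. Below is a minimal sketch with hypothetical judgments.

```python
from typing import List


def mrdd_vector(relevance: List[bool]) -> List[int]:
    """Relevant-document distribution vector <r1, ..., rs> for one training query.

    relevance[k] tells whether the document at rank k + 1 is relevant.
    r_i is the number of top-ranked documents that must be retrieved to
    obtain i relevant documents, i.e. the rank of the i-th relevant one.
    """
    return [rank for rank, is_rel in enumerate(relevance, start=1) if is_rel]


# Hypothetical judgments for the top 7 documents returned by one database.
print(mrdd_vector([True, False, False, True, True, False, True]))  # [1, 4, 5, 7]
```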

PROPOSED WORK

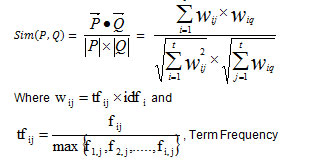

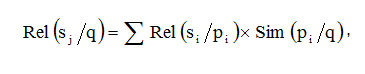

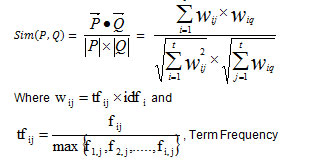

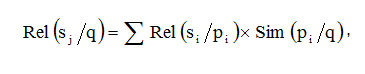

The proposed algorithm is based on [15, 16]. In that approach, the documents retrieved for each past query from all selected search engines are used to calculate the relevance between each search engine and the respective past query using the top-k documents. The top-k documents are taken from a merged list of the documents returned by all search engines. In this way, the retrieved results of past queries are used to select the appropriate search engines for a specific user query. Since there tend to be many similar queries [21] in a real-world federated search system, the information carried by past queries can help provide better resource selection results. In this section, we propose an algorithm, qSim, that uses this information to guide search engine selection. The algorithm first estimates the value rel(sj|pi) for all past queries. For an online query q, the task is to estimate rel(sj|q) based on rel(sj|pi) and sim(pi|q). The proposed algorithm calculates the similarity between a past query and the user query using the cosine function of the vector space model. The query similarity algorithm in [15] normalizes the value of sim(pi|q) using a constant. If we instead treat the constant as a variable and vary its value, the algorithm produces better results. This is not addressed by [15], and the proposed algorithm focuses on it. The search engines with higher values of rel(sj|q) are selected by the metasearch engine.

The steps of the proposed algorithm can be described as follows. Assume that there exists a set of past queries P = {p1, p2, ..., pm}, where pi represents the ith past query, and a set of information sources (search engines) S = {s1, s2, ..., sn} for the user query. First, generate the ranked list for each past query pi, calculate rel(sj|pi) and assign scores to the information sources as in [15]. Then, for a user query q = {q1, q2, ..., qk}, where qi represents a term of the user query, calculate sim(pi|q) as inspired by [12, 4].

The term frequency (TF) weight of a term ti in document dj is the number of times that ti appears in dj, denoted by fij.

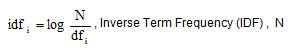

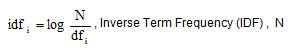

Let N be the total number of documents in the system, and let dfi be the number of documents in which term ti appears at least once.
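The weighting and cosine steps can be sketched in Python as follows. The paper's own formulas survive only as images, so this sketch assumes the common textbook form w = fij · log(N / dfi) with raw term frequency. The document-frequency table and the queries are hypothetical.

```python
import math
from collections import Counter
from typing import Dict, List


def tfidf(terms: List[str], df: Dict[str, int], n_docs: int) -> Dict[str, float]:
    """Assumed weighting: raw frequency f_ij times idf = log(N / df_i).

    Terms without a document-frequency entry are skipped (assumption).
    """
    tf = Counter(terms)
    return {t: f * math.log(n_docs / df[t]) for t, f in tf.items() if df.get(t)}


def cosine(u: Dict[str, float], v: Dict[str, float]) -> float:
    """Cosine of the angle between two sparse term-weight vectors."""
    dot = sum(w * v[t] for t, w in u.items() if t in v)
    norm_u = math.sqrt(sum(w * w for w in u.values()))
    norm_v = math.sqrt(sum(w * w for w in v.values()))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


# Hypothetical collection statistics with N = 100 documents.
df = {"metasearch": 10, "engine": 40, "selection": 20, "ranking": 25}
p1 = tfidf(["metasearch", "engine", "selection"], df, 100)  # a past query
q = tfidf(["metasearch", "selection"], df, 100)              # the user query
print(round(cosine(p1, q), 3))  # 0.951
```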

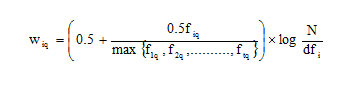

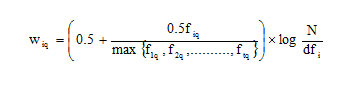

where wiq is the weight of term ti in q. In the next step, we normalize the value of sim(pi|q) using [15]. In [15], the cutoff is computed as CutSimq = α · MaxSimq with α = 0.8. By treating α as a variable and varying its value (α = 0.8, 0.7, 0.6, 0.5, 0.4, 0.3, 0.2, 0.1), we obtain better results. This is not addressed by [15], and the proposed algorithm focuses on it. Finally, for the user query q, rel(sj|q) is computed for each search engine, and the search engines are ranked by this value. A larger value of rel(sj|q) means that sj is more likely to contain the most relevant documents with respect to the user query q.
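The selection step can be outlined in a short Python sketch. The normalization equations of [15] are only available as images, so every rule below is an assumption:

- MaxSimq is the largest sim(pi|q).
- Past queries below CutSimq = α · MaxSimq are dropped.
- The remaining similarities are rescaled to sum to 1.
- rel(sj|q) = Σi sim'(pi|q) · rel(sj|pi).

All names and numbers are hypothetical.

```python
from typing import Dict, List, Tuple


def rank_engines(sim: Dict[str, float],
                 rel: Dict[str, Dict[str, float]],
                 alpha: float = 0.8) -> List[Tuple[str, float]]:
    """Rank search engines for a user query q from past-query evidence.

    sim:   sim(p_i | q) for every past query p_i.
    rel:   rel(s_j | p_i), indexed as rel[p_i][s_j].
    alpha: cutoff factor, CutSim_q = alpha * MaxSim_q.
    Assumed normalization: past queries below the cutoff get weight 0 and
    the remaining similarities are rescaled to sum to 1.
    """
    cut_sim = alpha * max(sim.values())
    kept = {p: s for p, s in sim.items() if s >= cut_sim and s > 0}
    total = sum(kept.values())
    scores: Dict[str, float] = {}
    for p, s in kept.items():
        weight = s / total  # normalized sim'(p_i | q)
        for engine, r in rel[p].items():
            scores[engine] = scores.get(engine, 0.0) + weight * r
    # A larger rel(s_j | q) suggests s_j holds more documents relevant to q.
    return sorted(scores.items(), key=lambda item: (-item[1], item[0]))


sim = {"p1": 0.9, "p2": 0.6, "p3": 0.1}
rel = {"p1": {"s1": 0.5, "s2": 0.5}, "p2": {"s1": 0.2, "s3": 0.8}, "p3": {"s4": 1.0}}
print([e for e, _ in rank_engines(sim, rel, alpha=0.8)])  # ['s1', 's2']
print([e for e, _ in rank_engines(sim, rel, alpha=0.5)])  # ['s1', 's3', 's2']
```

With α = 0.8 only p1 passes the cutoff. With α = 0.5, p2 also contributes, which brings s3 into the ranking.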

EXPERIMENTAL RESULT

To test the effectiveness of the proposed algorithm, it was simulated in MATLAB 2010b. The experiment assumes six search engines, five training (past) queries and one user query, where every past query and the user query have at most six terms. The results are evaluated as follows.

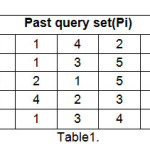

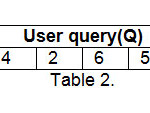

Consider a set of five past queries and one user query.

For every past query PQi, the metasearch engine selects the underlying search engines, and each training query is submitted to all selected search engines to retrieve documents. A single merged list of the documents returned by the search engines is then prepared for each training query using [15]. Similarly, the user query q is submitted to all search engines selected by the metasearch engine, and a single merged list of the documents returned by the six search engines for the user query is prepared using [15].
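The merged lists are built with the method of [15], which is not reproduced here. As a stand-in, the sketch below uses plain round-robin interleaving with duplicate removal. This is a different and simpler merging technique, used only to show what a merged list that records each document's source engine can look like. Engine and document names are hypothetical.

```python
from itertools import zip_longest
from typing import Dict, List, Tuple


def round_robin_merge(lists: Dict[str, List[str]]) -> List[Tuple[str, str]]:
    """Stand-in merger: interleave ranked lists one rank at a time.

    lists maps an engine name to its ranked document ids.
    Returns (doc_id, engine) pairs; a duplicate document is credited to the
    engine that reached it first in the interleaving order.
    """
    engines = sorted(lists)  # fixed engine order for reproducibility
    seen = set()
    merged: List[Tuple[str, str]] = []
    for rank_slice in zip_longest(*(lists[e] for e in engines)):
        for engine, doc in zip(engines, rank_slice):
            if doc is not None and doc not in seen:
                seen.add(doc)
                merged.append((doc, engine))
    return merged


print(round_robin_merge({"s1": ["a", "b", "c"], "s2": ["b", "d"]}))
# [('a', 's1'), ('b', 's2'), ('d', 's2'), ('c', 's1')]
```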

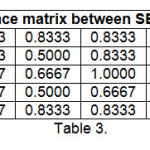

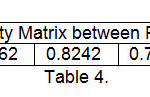

Next, rel(sj|pi) is computed between each search engine and every past query, using the top-6 documents of the merged lists of the past queries and the user query.
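The scoring of [15] is not spelled out in the text, so the sketch below assumes one simple choice for illustration. Here rel(sj|pi) is the fraction of the top-k documents of pi's merged list that came from sj, with k = 6 as in the experiment. The function name and the data are hypothetical.

```python
from collections import Counter
from typing import Dict, List, Sequence


def rel_from_merged_list(merged: Sequence[str], engines: List[str], k: int) -> Dict[str, float]:
    """Hypothetical rel(s_j | p_i): share of the top-k merged documents from s_j.

    merged: for each position of p_i's merged ranked list, the name of the
            search engine that returned the document at that position.
    """
    top = Counter(merged[:k])  # documents each engine supplied to the top k
    return {s: top[s] / k for s in engines}


merged_p1 = ["s2", "s1", "s2", "s4", "s2", "s3", "s1"]  # hypothetical merged list
rel_p1 = rel_from_merged_list(merged_p1, ["s1", "s2", "s3", "s4", "s5", "s6"], k=6)
print(rel_p1)  # s2 supplied 3 of the top 6 documents, so rel_p1["s2"] == 0.5
```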

The similarity between the user query and each past query is then calculated with the cosine similarity, as in the proposed algorithm.
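The implementation used MATLAB's pdist function. With the 'cosine' metric, pdist returns one minus the cosine similarity, listed pair by pair in the order (1,2), (1,3), ..., (2,3), ..., so similarities have to be recovered as 1 − D. The Python sketch below mirrors that convention with the standard library only; it is not the authors' MATLAB code. The binary term vectors are hypothetical.

```python
import math
from itertools import combinations
from typing import List, Sequence


def cosine_similarity(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine of the angle between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def pdist_cosine(rows: List[Sequence[float]]) -> List[float]:
    """Condensed pairwise cosine distances (1 - cosine similarity), listed in
    the pair order (0,1), (0,2), ..., (1,2), ... that pdist also uses."""
    return [1.0 - cosine_similarity(rows[i], rows[j])
            for i, j in combinations(range(len(rows)), 2)]


# Hypothetical binary term vectors; columns: meta, search, engine, query, log.
past = [[1, 1, 1, 0, 0], [0, 1, 0, 1, 1], [1, 1, 0, 0, 0]]
user = [1, 1, 0, 0, 0]
rows = past + [user]  # the user query is the last row
dist = dict(zip(combinations(range(len(rows)), 2), pdist_cosine(rows)))
sims = [1.0 - dist[(i, len(rows) - 1)] for i in range(len(past))]  # sim(p_i | q)
print([round(s, 3) for s in sims])  # [0.816, 0.408, 1.0]
```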

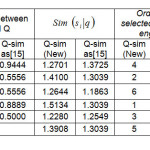

Next, the values of sim(pi|q) are normalized for each value of the variable α (0.8, 0.7, 0.6, 0.5, 0.4, 0.3, 0.2, 0.1), and the similarity between the user query and every search engine is calculated as in [14, 15].
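The hypothetical sketch below shows what varying α does. For each α used in the experiment, it lists which past queries reach CutSimq = α · MaxSimq, taking MaxSimq as the largest sim(pi|q) (an assumption). Lowering α admits more, and less similar, past queries into the estimate of rel(sj|q).

```python
from typing import Dict, List


def kept_past_queries(sim: Dict[str, float], alpha: float) -> List[str]:
    """Past queries whose similarity reaches CutSim_q = alpha * MaxSim_q
    (MaxSim_q assumed to be the largest sim(p_i | q))."""
    cut_sim = alpha * max(sim.values())
    return sorted(p for p, s in sim.items() if s >= cut_sim)


sim = {"p1": 0.82, "p2": 0.40, "p3": 0.75, "p4": 0.10, "p5": 0.55}  # hypothetical sim(p_i | q)
for alpha in (0.8, 0.7, 0.6, 0.5, 0.4, 0.3, 0.2, 0.1):
    # e.g. 0.8 -> ['p1', 'p3'], 0.1 -> all five past queries
    print(alpha, kept_past_queries(sim, alpha))
```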

Arranging the similarities between the search engines and q in descending order gives the order in which the search engines are selected.

RESULT BASED COMPARISON

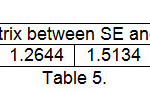

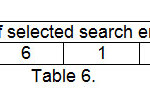

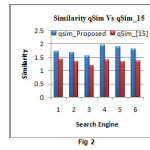

Table 7 gives the simulation results of the qSim algorithm, which uses the ranked merged list of documents, and of the algorithm in [15].

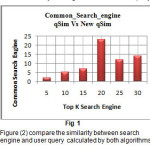

Figure 1 shows how many search engines are selected in common by both algorithms for the user query.

Figure 2 compares the similarity between each search engine and the user query as calculated by both algorithms.

CONCLUSION & FUTURE SCOPE

Resource selection is a valuable component of a search system. This paper proposes a query similarity algorithm that computes the similarity between past queries and the user query using cosine similarity. The algorithm is implemented in MATLAB with the pdist function, and the normalization is tested by varying the value of the variable α. Similarity is calculated on the merged lists of the past queries and on the merged list of the user query. Experimental results demonstrate the advantage of the proposed qSim in achieving a more effective similarity between search engines and the user query compared with the existing query similarity algorithm [15]. If a large number of search engines is selected for a user query, the search engine selection problem is compounded. Selecting the relevant search engines for a user query from among the many available search engines therefore remains a challenging task.

REFERENCES

- Hossein Jadidoleslamy, “Introduction to metasearch engines and result merging strategies: a survey,” International Journal of Advances in Engineering & Technology, ISSN: 2231-1963, Nov. (2011).

- K. Srinivas, P. V. S. Srinivas, A. Govardhan, “A Survey on the Performance Evaluation of Various Meta Search Engines,” IJCSI International Journal of Computer Science Issues, Vol. 8, Issue 3, No. 2, ISSN (Online): 1694-0814, May (2011).

- Manoj M. and Elizabeth Jacob, “Information retrieval on Internet using meta-search engine: a review,” Journal of Scientific & Industrial Research, Vol. 67, pp. 739-746, October (2008).

- Bing Liu, “Web Data Mining,” ACM Computing Classification, (1998).

- Daniela Rus, Robert Gray, and David Kotz, “Autonomous and Adaptive Agents that Gather Information,” Proceedings of International Workshop on Intelligent Adaptive Agents, WS-96-04, AAAI, (1996).

- Luo Si and Jamie Callan, “A Semisupervised Learning Method to Merge Search Engine Results,” ACM Transactions on Information Systems, Vol. 21, No. 4, pp. 457–491, October (2003).

- Ling Zheng, Yang Bo, Ning Zhang, “An Improved Link Selection Algorithm for Vertical Search Engine,” The 1st International Conference on Information Science and Engineering, IEEE, (2009).

- Luo Si and Jamie Callan, “The Effect of Database Size Distribution on Resource Selection Algorithms,” SIGIR 2003 Workshop on Distributed IR, LNCS 2924, pp. 31–42, Springer-Verlag Berlin Heidelberg, (2003).

- Djoerd Hiemstra, “Information Retrieval Models,” John Wiley and Sons, Ltd., ISBN-13: 978-0470027622, November (2009).

- H. Jadidoleslamy, “Search Result Merging and Ranking Strategies in Meta-Search Engines: A Survey,” IJCSI International Journal of Computer Science Issues, ISSN (Online): 1694-0814, Vol. 9, Issue 4, No. 3, July (2012).

- Weiyi Meng, Clement Yu, King-Lup Liu, “Building Efficient and Effective Metasearch Engines,” ACM Computing Surveys, Vol. 34, No. 1, pp. 48–89, March (2002).

- Xue Yun, Shen Xiaoping, Chen Jianbin, “Research on an Algorithm of Metasearch Engine Based on Personalized Demand of Users,” 2010 International Forum on Information Technology and Applications, IEEE, (2010).

- Daniel Dreilinger, Adele E. Howe, “Experiences with Selecting Search Engines Using Metasearch,” ACM Transactions on Information Systems, Vol. 15, No. 3, pp. 195–222, July (1997).

- Suleyman Cetintas, Luo Si, Hao Yuan, “Learning from Past Queries for Resource Selection,” ACM CIKM’09, November 2–6, (2009).

- R. Kumar, A. K. Giri, “Learning Based Approach for Search Engine Selection in Metasearch,” IJEMR, Volume-3, Issue-5, ISSN No.: 2250-0758, pp. 82-88, October (2013).

- Filippo Menczer, Melania Degeratu, W. Nick Street, “Efficient and Scalable Pareto Optimization by Evolutionary Local Selection Algorithms,” Evolutionary Computation 8(2): 223-247, Massachusetts Institute of Technology, (2000).


- Amit Singhal, “Modern Information Retrieval: A Brief Overview,” Bulletin of the IEEE Computer Society Technical Committee on Data Engineering, (2001).

- G. Towell, E. M. Voorhees, N. K. Gupta, B. J. Laird, “Learning Collection Fusion Strategies for Information Retrieval,” Proceedings of the Twelfth Annual Machine Learning Conference, Lake Tahoe, July (1995).

- Yuan Fu-Yong, Wang Jin-Dong, “An Implemented Rank Merging Algorithm for Meta Search Engine,” International Conference on Research Challenges in Computer Science, IEEE, (2009).

- Li Qiang, Chen Qin, Wang Rong-Bo, “Weighted-Position & Abstract Ranking Algorithm Based on User Profile for Meta-Search Engine,” World Congress on Software Engineering, IEEE, (2009).

- Luo Si and Jamie Callan, “Relevant Document Distribution Estimation Method for Resource Selection,” SIGIR ’03, July 28-Aug 1, 2003, Toronto, Canada, ACM, (2003).

This work is licensed under a Creative Commons Attribution 4.0 International License.