Background Construction of Video Frames in Video Surveillance System Using Pixel Frequency Accumulation

Jalal H. Awad and Amir S. Almallah

Department of Computer Science, College of Science, University of Mustansiriyah (UOM)

Article Publishing History


Article Published : 07 Jul 2014


ABSTRACT:

Moving object detection is widely used in diverse disciplines such as intelligent transportation systems, airport security systems, video monitoring systems, and so on. In this paper we propose an edge segment based statistical background modeling algorithm that can be used for moving edge detection in video surveillance systems with a static camera. Because the proposed method is edge segment based, it helps overcome some of the difficulties that traditional pixel based methods face in updating the background model or in producing ghosts when a sudden change occurs in the background. As an edge segment based method it is robust to illumination variation and noise. It also avoids a difficulty of existing pixel based methods, namely the scattering of moving edge pixels, which prevents them from utilizing edge shape information. Some other edge segment based methods treat every edge segment equally, creating edge mismatches due to non-stationary background. The proposed method addresses this shortcoming with a model built from the statistics of each background edge segment, so that it can model both static and partially moving background edges using ordinary training images that may even contain moving objects.

KEYWORDS:

Background modeling, Statistical distribution map, Moving edge segment, Edge segment matching.

Copy the following to cite this article:

Awad J. H, Almallah A. S. Background Construction of Video Frames in Video Surveillance System Using Pixel Frequency Accumulation. Orient. J. Comp. Sci. and Technol;7(1)


Copy the following to cite this URL:

Awad J. H, Almallah A. S. Background Construction of Video Frames in Video Surveillance System Using Pixel Frequency Accumulation. Orient. J. Comp. Sci. and Technol;7(1). Available from: http://computerscijournal.org/?p=710


Introduction

Moving object detection in real time is a challenging task in visual surveillance systems. It often acts as an initial step for more sophisticated operations such as classification of the detected moving object[1]. Visual surveillance is one of the major research topics and has two major steps: detection and recognition of moving objects. Detection speed and accuracy are of major importance; in other words, moving objects must be detected as quickly and accurately as possible. Therefore, changes such as environmental light and undesired movements such as waving trees must have the least possible impact on the accuracy rate[2]. Video surveillance is used widely in many fields, such as traffic monitoring, human activity and behavior understanding, and so on. In an active video surveillance system it is very hard to detect and track moving objects in a sequence of image frames because of randomly moving objects, scene complexity, and camera motion. Detection and tracking of moving objects are important and challenging problems in computer vision, especially for vision-based surveillance systems[3].

The background subtraction method is a very commonly used technique in moving object segmentation[4,5,6]. It attempts to detect moving regions by subtracting the current image pixel-by-pixel from a reference background image that is created by averaging images over time in an initialization period. The pixels where the difference is above a threshold are classified as foreground. Optical flow methods make use of the flow vectors of moving objects over time to detect moving regions in an image[5,7]. They can detect motion in video sequences even from a moving camera; however, most optical flow methods are computationally complex and cannot be used in real time without specialized hardware.

The temporal differencing method attempts to detect moving regions by making use of the pixel-by-pixel difference of consecutive frames (two or three) in a video sequence. This method is highly adaptive to dynamic scene changes; however, it generally fails to detect all the relevant pixels of some types of moving objects. More advanced methods that make use of the statistical characteristics of individual pixels have been developed to overcome the shortcomings of basic background subtraction methods. These statistical methods are mainly inspired by the background subtraction methods in that they keep and dynamically update statistics of the pixels that belong to the background image. Foreground pixels are identified by comparing each pixel’s statistics with those of the background model. This approach is becoming more popular due to its reliability in scenes that contain noise, illumination changes and shadow[5].
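
As a minimal sketch of the two simplest schemes described above, the following Python code builds a reference background by averaging training frames and classifies a pixel as foreground when its absolute difference from the reference exceeds a threshold. Running the same comparison against the previous frame gives temporal differencing. The frame values, the threshold of 30 and the function names are illustrative assumptions, and plain nested lists stand in for image arrays to keep the example dependency-free.

```python
def mean_background(frames):
    """Average a list of equally sized grayscale frames pixel by pixel."""
    n = len(frames)
    rows, cols = len(frames[0]), len(frames[0][0])
    return [[sum(f[r][c] for f in frames) / n for c in range(cols)]
            for r in range(rows)]


def foreground_mask(frame, reference, threshold):
    """Mark pixels whose absolute difference from the reference exceeds threshold."""
    return [[1 if abs(p - q) > threshold else 0 for p, q in zip(row, ref_row)]
            for row, ref_row in zip(frame, reference)]


# Hypothetical 3x3 grayscale frames: a static scene and one bright moving pixel.
training = [[[10, 10, 10], [10, 10, 10], [10, 10, 10]] for _ in range(4)]
previous = [[10, 10, 10], [200, 10, 10], [10, 10, 10]]
current = [[10, 10, 10], [10, 200, 10], [10, 10, 10]]

background = mean_background(training)

# Background subtraction: compare the current frame with the averaged reference.
subtraction_mask = foreground_mask(current, background, threshold=30)

# Temporal differencing: compare the current frame with the previous frame.
differencing_mask = foreground_mask(current, previous, threshold=30)
```

In this toy case background subtraction marks only the pixel the object currently occupies, whereas temporal differencing also marks the position the object has just left.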

The proposed algorithm

Traditional edge segment based methods suffer from many false alarms. These come from inaccurate matching of background edges in an image sequence, caused by variation of edge position and shape due to illumination change, background movement, camera movement and noise. Each edge fragment has a different variation, even for the same object in the same scene. Figure 1 illustrates the characteristics of background edges for consecutive frames: figures 1(a) and 1(b) show two consecutive background edge images, and figure 1(c) shows the edge difference image of those two images. Two regions are marked in figure 1(c). Region ‘1’ contains the difference between the edges of the same object (a tree) in the two consecutive frames (a and b), caused by the movement of the tree due to wind. Region ‘2’ contains the difference between the edges of the same object (windows) in the two frames, which here has another cause (i.e. illumination variation). Compared with other regions (i.e. walls, street), the tree and windows have high variation, so it is clear from this figure that every background edge segment should be modeled individually and given an adequate threshold of its own, unlike traditional methods based on a global common distance threshold. We rely on the statistics of each region for this task: an edge segment based statistical background model can estimate background edge behavior by observing a number of reference frames, keeping the statistics of motion variation, shape and segment size variation for every background edge segment. The background edge matching accuracy can then be improved by using these statistics to set an edge-specific automatic threshold.

Figure 1: Characteristics of background edge segments in consecutive frames: (a)-(b) edge segments from two consecutive frames, and (c) difference between (a) and (b).

Now, to extract the statistics of each background edge segment across multiple consecutive frames, the proposed algorithm first extracts the edges of each frame in the training sequence using the Canny edge operator. Let eji represent the edges extracted from frame i; the overall extracted edges from frame i then form a binary edge map (BEMi).

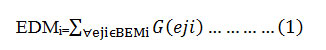

To estimate the behavior of different edges (especially edge position, shape and size variation), a Gaussian kernel approximated mask G(.), shown in figure 2(a), is placed on every edge pixel position over BEMi and convolution is performed to create the edge distribution map of frame i (EDMi). The Gaussian kernel approximated mask is intended to estimate the previously mentioned edge variations, so its dimensions can be tuned according to the tolerance for edge movement and other variation.

Figure 2: Gaussian convolution over a sample edge segment: (a) a Gaussian kernel approximated mask, (b) edge segment extracted using the Canny operator, (c) convolution outcome using the mask in (a) at every edge pixel position of the edge segment in (b), and (d) normalized relative probability values of getting an edge pixel for each pixel location in the distribution.

Figure 2(b) shows an edge segment extracted using the Canny edge operator, and figure 2(c) shows the convolution outcome of the Gaussian mask over this edge segment (the mask is 3×3). Note that the thin edge in figure 2(b) becomes wider in figure 2(c); this reflects the edge segment behavior, as it gives the segment a flexible, wide matching area.
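
The widening effect can be sketched in a few lines of Python. The code below stamps a 3×3 mask on every edge pixel of a binary edge map and sums the stamps, which is one way to read the convolution step described above. The mask values are an assumed integer approximation of a Gaussian (the values of the mask in figure 2(a) are not reproduced in the text), and the edge map and function name are hypothetical.

```python
# Assumed 3x3 integer approximation of a Gaussian; the paper's own mask
# values appear only in its figure 2(a).
GAUSS_3X3 = [[1, 2, 1],
             [2, 4, 2],
             [1, 2, 1]]


def edge_distribution_map(bem, mask=GAUSS_3X3):
    """Stamp the mask on every edge pixel of a binary edge map and sum the stamps."""
    rows, cols = len(bem), len(bem[0])
    half = len(mask) // 2
    edm = [[0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            if not bem[r][c]:
                continue
            for dr in range(-half, half + 1):
                for dc in range(-half, half + 1):
                    rr, cc = r + dr, c + dc
                    # Contributions that fall outside the image are dropped.
                    if 0 <= rr < rows and 0 <= cc < cols:
                        edm[rr][cc] += mask[dr + half][dc + half]
    return edm


# Hypothetical binary edge map: a short horizontal edge, one pixel thick.
bem = [[0, 0, 0, 0, 0],
       [0, 0, 0, 0, 0],
       [0, 1, 1, 1, 0],
       [0, 0, 0, 0, 0],
       [0, 0, 0, 0, 0]]

edm = edge_distribution_map(bem)
for row in edm:
    print(row)
```

The one-pixel-thick edge in row 2 spreads over rows 1 to 3 of the result, with the largest values along the original edge position.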

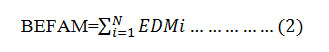

Now, from a set of edge distribution maps (EDM) extracted from N frames (where N is the number of frames in the training sequence), the proposed algorithm superimposes them and adds them together to get the binary background edge frequency accumulation map (BEFAM).

This accumulation map (BEFAM) contains useful information such as edge motion statistics and edge shape variation statistics, as well as the influence of moving objects that were present in the sequence during the accumulation period. To remove the edge contribution of all the moving objects that pass through the sequence, the proposed algorithm utilizes the pixel frequencies that result from the successive accumulation, as in equation (2). The algorithm relies on the fact that pixels of static things in a scene have higher accumulation frequencies than pixels of moving objects, whose edge pixels fall in different locations in each successive frame. Empirically, we found that for N=150 frames, pixels with frequency ≤12 should be removed. Background edge pixels create higher accumulation frequencies, as shown in figure 3: the higher peaks correspond to the contribution of edges from the background, whereas the very small peaks come from the influence of moving object edges.
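
The accumulation and low-frequency removal can be illustrated with a small Python sketch. It sums N maps element-wise and zeroes every position whose accumulated value does not exceed a threshold, using N=150 and the threshold 12 quoted above. For readability the maps here are assumed to hold unit contributions, so the accumulated value is a plain count; the synthetic one-row sequence and the function names are hypothetical.

```python
def accumulate_maps(maps):
    """Sum a list of equally sized maps element-wise (the accumulation map)."""
    rows, cols = len(maps[0]), len(maps[0][0])
    acc = [[0] * cols for _ in range(rows)]
    for m in maps:
        for r in range(rows):
            for c in range(cols):
                acc[r][c] += m[r][c]
    return acc


def suppress_low_frequencies(acc, min_frequency):
    """Zero every position whose accumulated value is <= min_frequency."""
    return [[v if v > min_frequency else 0 for v in row] for row in acc]


# Hypothetical training set of 150 one-row maps. A static edge sits at
# column 0 in every frame; a moving edge visits columns 1..15 in turn.
N = 150
maps = []
for i in range(N):
    row = [1] + [0] * 15
    row[(i % 15) + 1] = 1
    maps.append([row])

befam = accumulate_maps(maps)
cleaned = suppress_low_frequencies(befam, min_frequency=12)
```

The static edge accumulates a frequency of 150, while each position crossed by the moving edge reaches only 10 and is therefore removed.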

Figure 3: Edge pixel accumulation frequencies resulting from the accumulation of 150 training frames. According to the color bar at the right, pixels shown in red have higher frequencies.

The next step is normalizing the background edge accumulation map. Figure 2(d) illustrates a normalized background edge frequency accumulation map, which is made by dividing every pixel position value of the distribution in figure 2(c) by the highest value found in the distribution. Normalization gives the relative probability of getting an edge pixel at that position in the distribution. Now, to measure the probable movement of an edge pixel (for example, a moving tree branch) from its origin position, the eight-neighborhood distance D8 of each position from the origin (mean position) needs to be calculated. These distances can easily be calculated by taking each individual accumulated edge segment extracted using the Canny operator and superimposing it over the normalized background edge frequency accumulation map. The superimposed segment is considered the edge mean position of the corresponding normalized map, that is, the origin from which the distances to the other locations in the map are measured.

Thus, the distribution map generated this way, after thresholding and normalizing BEFAM, is called the statistical edge distribution map (SEDM). Figure 4 shows an example of an SEDM created from a sample sequence.
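
A minimal Python sketch of these two steps follows. Normalization divides every value by the maximum of the map, and the D8 distance is taken here to be the chessboard distance max(|Δrow|, |Δcol|) to the nearest mean-edge pixel. The sample accumulation values, the choice of mean positions and the function names are illustrative assumptions.

```python
def normalize(acc):
    """Divide every value by the map's maximum so that the peak becomes 1.0."""
    peak = max(max(row) for row in acc)
    if peak == 0:
        # An empty map has nothing to normalize; return an unchanged copy.
        return [row[:] for row in acc]
    return [[v / peak for v in row] for row in acc]


def d8_distance_map(mean_pixels, rows, cols):
    """Chessboard (D8) distance from each position to the nearest mean-edge pixel."""
    return [[min(max(abs(r - mr), abs(c - mc)) for mr, mc in mean_pixels)
             for c in range(cols)]
            for r in range(rows)]


# Hypothetical thresholded accumulation map of one horizontal edge segment.
acc = [[0, 20, 30, 20, 0],
       [40, 90, 120, 90, 40],
       [0, 20, 30, 20, 0]]

# Assumed mean (peak) positions: the thin edge running along the middle row.
mean_pixels = [(1, 1), (1, 2), (1, 3)]

sedm = normalize(acc)
d8 = d8_distance_map(mean_pixels, rows=3, cols=5)
```

Positions on the superimposed thin edge get distance 0, and every other position in this small map lies at distance 1 from it.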

Figure 4: Creation of SEDM and SBE from a sequence of N frames: (a) a sample frame from the sequence, (b) accumulation map BEFAM of the sequence in (a), and (c) SBE obtained by thresholding and extracting thin edges of (b).

Static background edge list creation

The static background edge (SBE) list represents the mean (peak) positions in each edge segment distribution, as shown in figure 4(c). This list can be obtained by considering each region resulting from the accumulation of the corresponding edge segment (at the same position) in the consecutive frames as a static background edge segment.

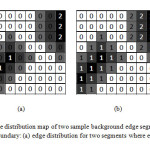

Unique labels are assigned to the newly extracted edge segments. Edge segment labeling maps for all SBE segments are computed using SEDM. Figure 5 illustrates two edge distribution maps along with the corresponding thinned (mean) edge segments in dark color, with their labels shown in their distributions.
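
One possible way to realize the labeling is sketched below in Python. The first function assigns a unique label to each 8-connected group of SBE pixels. The second builds a labeling map under an assumed rule, not stated in the text, that every nonzero SEDM position takes the label of its nearest labeled edge pixel under the D8 distance. The sample maps and function names are hypothetical.

```python
from collections import deque


def label_segments(sbe):
    """Give each 8-connected group of edge pixels in a binary map a unique label."""
    rows, cols = len(sbe), len(sbe[0])
    labels = [[0] * cols for _ in range(rows)]
    next_label = 0
    for r in range(rows):
        for c in range(cols):
            if not sbe[r][c] or labels[r][c]:
                continue
            next_label += 1
            labels[r][c] = next_label
            queue = deque([(r, c)])
            while queue:  # breadth-first flood fill over the 8 neighbours
                cr, cc = queue.popleft()
                for dr in (-1, 0, 1):
                    for dc in (-1, 0, 1):
                        nr, nc = cr + dr, cc + dc
                        if (0 <= nr < rows and 0 <= nc < cols
                                and sbe[nr][nc] and not labels[nr][nc]):
                            labels[nr][nc] = next_label
                            queue.append((nr, nc))
    return labels


def labeling_map(sedm, segment_labels):
    """Assumed rule: a nonzero SEDM position takes the label of the nearest
    labeled edge pixel under the D8 (chessboard) distance."""
    seeds = [(r, c, lab) for r, row in enumerate(segment_labels)
             for c, lab in enumerate(row) if lab]
    result = []
    for r, row in enumerate(sedm):
        out = []
        for c, value in enumerate(row):
            if value > 0 and seeds:
                nearest = min(seeds,
                              key=lambda s: max(abs(r - s[0]), abs(c - s[1])))
                out.append(nearest[2])
            else:
                out.append(0)
        result.append(out)
    return result


# Hypothetical SBE with two separate thin segments, and a matching SEDM.
sbe = [[0, 0, 0, 0, 0, 0],
       [1, 1, 0, 0, 1, 1],
       [0, 0, 0, 0, 0, 0]]
sedm = [[0.2, 0.2, 0.0, 0.0, 0.3, 0.3],
        [1.0, 0.9, 0.1, 0.0, 0.8, 1.0],
        [0.2, 0.2, 0.0, 0.0, 0.3, 0.3]]

segment_labels = label_segments(sbe)
region_labels = labeling_map(sedm, segment_labels)
```

The labeled region around each thin segment then plays the role of the search boundary for that segment.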

Figure 5: Edge distribution map of two sample background edge segments with their search boundary: (a) edge distribution for two segments, where edge positions are labeled with the segment identifier, and (b) edge labeling map for the two edge segments for their associated distributions in (a).

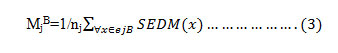

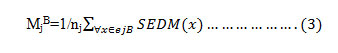

Thus every background edge segment j creates an edge labeling map. The labeled area represents the motion statistics of the background edge through the training sequence. The area also indicates the search boundary for a candidate background edge segment. Now, for each edge segment ejB on the SBE, the edge appearance relative mean probability MjB is calculated by taking the average of the SEDM values at all edge pixel locations (x) of ejB.

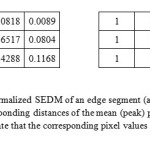

Here nj is the number of edge points on the edge segment ejB. Figure 6 shows a thresholded and normalized SEDM of an edge segment (a) and the corresponding distances from its mean positions (b), where the edge mean positions have zero distance. For this edge segment, MjB on the edge mean positions is 0.82585; thus the distribution has a relative probability of 0.82585 of having a background segment at its mean position.
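
The averaging described above amounts to a short computation, sketched here in Python. The SEDM patch, the segment coordinates and the function name are hypothetical and are not the values behind figure 6.

```python
def mean_edge_probability(sedm, edge_pixels):
    """Average the SEDM values at the (row, col) positions of one edge segment."""
    return sum(sedm[r][c] for r, c in edge_pixels) / len(edge_pixels)


# Hypothetical normalized SEDM patch and the mean positions of one segment.
sedm = [[0.25, 0.50, 0.25],
        [0.75, 1.00, 0.50],
        [0.25, 0.50, 0.25]]
segment = [(1, 0), (1, 1), (1, 2)]  # n_j = 3 edge points

m_j = mean_edge_probability(sedm, segment)
print(m_j)  # 0.75
```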

Figure 6: (a) Thresholded and normalized SEDM of an edge segment, and (b) the corresponding distances from the mean (peak) pixels of (a); zero entries in (b) indicate that the corresponding pixel values in (a) are the peaks of this segment.

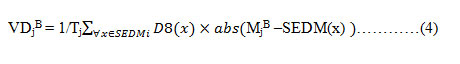

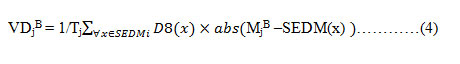

The variance in distance (VDjB) of the probability distribution is calculated by:

Here, Tj is the total number of points in the labeled region SEDMi, D8(x) is the eight-connectivity distance from the nearest edge mean location in SBE to the point x, and SEDM(x) is the normalized accumulation score, i.e., the relative probability value of position x. Using equation (4), VDjB = 0.5485 is found for the distribution in figure 6. For every ejB segment in the SBE list, the corresponding VDjB is stored as the distance threshold for its associated background edge segment.
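
Since equation (4) itself is not reproduced in the text above, the following Python sketch shows only one plausible reading of the symbol definitions and should not be taken as the authors' exact formula. It assumes the variance in distance is the sum of SEDM(x)·D8(x)² over the labeled region divided by Tj. The sample maps and the function name are hypothetical, so the result is not expected to match the 0.5485 reported for figure 6.

```python
def distance_variance(sedm, d8, region):
    """Assumed form: sum of SEDM(x) * D8(x)**2 over the region, divided by T_j."""
    t_j = len(region)  # total number of points in the labeled region
    return sum(sedm[r][c] * d8[r][c] ** 2 for r, c in region) / t_j


# Hypothetical normalized SEDM patch and its D8 distances from the mean row.
sedm = [[0.25, 0.50, 0.25],
        [0.75, 1.00, 0.50],
        [0.25, 0.50, 0.25]]
d8 = [[1, 1, 1],
      [0, 0, 0],
      [1, 1, 1]]
region = [(r, c) for r in range(3) for c in range(3)]  # T_j = 9

vd_j = distance_variance(sedm, d8, region)
```

Under this assumed form, positions on the mean edge contribute nothing, and the value grows as probability mass spreads farther from the mean position.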

The overall operation is illustrated in the flowchart of figure (7).

Conclusions

An edge segment based statistical background model is more suitable than pixel based models for real time video surveillance applications because it treats every edge segment as a whole and utilizes its shape information, is robust against illumination variation, and sets an automatic threshold for each edge segment individually. Pixel based methods cannot offer this: they can be time consuming and can be affected by illumination variation. Nor can traditional edge pixel based methods, which produce scattered edge pixels. By utilizing statistics and the movement approximation kernel, the proposed approach offers flexibility in separating true background edge segments from moving edge segments. Moreover, the background initialization step does not require a motion-free training image sequence.

References

- Pranab Kumar Dhar, Mohammad Ibrahim Khan, Ashoke Kumar Sen Gupta, D.M.H. Hasan and Jong-Myon Kim, “An Efficient Real Time Moving Object Detection Method for Video Surveillance System”, International Journal of Signal Processing, Image Processing and Pattern Recognition, Vol. 5, No. 3, September 2012.

- Ali Bazmi, Karim Faez, “Increasing the Accuracy of Detection and Recognition in Visual Surveillance”, International Journal of Electrical and Computer Engineering (IJECE), Vol. 2, No. 3, June 2012, pp. 395-404, ISSN: 2088-8708.

- CHI Jian-nan, ZHANG Chuang, ZHANG Han, LIU Yang, YAN Yan-tao, “Approach of Moving Objects Detection in Active Video Surveillance”, Joint 48th IEEE Conference on Decision and Control and 28th Chinese Control Conference, Shanghai, P.R. China, December 16-18, 2009.

- Yuyong CUI, Zhiyuan ZENG, Weihong CUI, Bitao FU, “Moving Object Detection Based Frame Difference and Graph Cuts”, Journal of Computational Information Systems 8: 1 (2012) 21–29. Available at http://www.Jofcis.com

- Yiğithan Dedeoğlu, “Moving Object Detection, Tracking and Classification for Smart Video Surveillance”, a thesis submitted to the Department of Computer Engineering and the Institute of Engineering and Science of Bilkent University in partial fulfillment of the requirements for the degree of Master of Science, August 2004.

- Kaiqi Huang, Liangsheng Wang, Tieniu Tan, Steve Maybank, “A real-time object detecting and tracking system for outdoor night surveillance”, the journal of the pattern recognition society, received 27 April 2006; received in revised form 31 March 2007; accepted 23 May 2007.

- Hyungkwan Son, Suyoung Chi, “Moving User Segmentation for Sports Simulator Using Frame Difference and Edge Detection”, International Journal of Signal Processing Systems, Vol. 1, No. 1, June 2013.

This work is licensed under a Creative Commons Attribution 4.0 International License.