Introduction

The increased availability and usage of online digital video has created a need for automatic video content analysis techniques. Detection of shot boundaries is an early and important processing step in video analysis, especially for indexing and effective retrieval applications [1]. Video shot boundary detection algorithms must cope with the difficulty of finding shot boundaries in the presence of camera motion, object motion, and illumination variations. Moreover, different shot boundaries may look very different, ranging from abrupt temporal changes to smooth temporal transitions. Shot boundary detection has been an area of active research, and many automatic techniques have been developed to detect frame transitions in video sequences [2].

Shot Boundary Detection

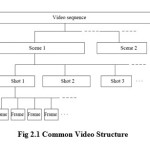

A video can be broken down into scenes, shots, and frames. A shot is a sequence of frames captured by a single camera in a single continuous action. A shot boundary is the transition between two shots. A scene is a logical grouping of shots into a semantic unit [1].

Shot transition types

Shot transitions are of two types.

- Abrupt.

An abrupt transition (hard cut) occurs in a single frame.

- Gradual.

A gradual transition spans several frames and is further classified into three types:

- Dissolve

- Fade

- Wipe

A dissolve is a gradual transition from one scene to another in which the first scene fades out while the second scene fades in, so it is a combination of a fade-out and a fade-in.

A fade-out is a slow decrease in brightness resulting in a black frame. A fade-in is the reverse: brightness slowly increases from a black frame.

A wipe is a gradual transition in which a line moves across the scene, with the new scene appearing behind the line [1].
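The relation between dissolves and fades can be illustrated with a small sketch. It assumes a linear cross-fade model, which is a common simplification and is not stated in the text; the function names `blend`, `dissolve`, and `fade_out` are hypothetical, and frames are plain lists of rows of gray levels.

```python
def blend(frame_a, frame_b, alpha):
    """Mix two equal-sized grayscale frames.

    alpha = 0 returns frame_a, alpha = 1 returns frame_b (assumed linear model).
    """
    return [
        [(1.0 - alpha) * a + alpha * b for a, b in zip(row_a, row_b)]
        for row_a, row_b in zip(frame_a, frame_b)
    ]


def dissolve(frame_a, frame_b, steps):
    """Return `steps` intermediate frames between frame_a and frame_b."""
    return [blend(frame_a, frame_b, t / (steps + 1)) for t in range(1, steps + 1)]


def fade_out(frame, steps):
    """A fade-out modelled as a dissolve into a black frame, ending on black."""
    black = [[0.0] * len(row) for row in frame]
    return dissolve(frame, black, steps) + [black]


if __name__ == "__main__":
    for f in dissolve([[100, 200]], [[0, 0]], steps=3):
        print(f)
```

Under this model, consecutive frames inside a gradual transition differ only slightly, which is why detectors that compare two adjacent frames tend to miss them.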

Features used for representation of video frame

Almost all shot change detection algorithms reduce the large dimensionality of the video domain by extracting a small number of features from one or more regions of interest in each video frame. Such features include the following:

1. Luminance/color

The simplest feature that can be used to characterize an image is its average grayscale luminance. This, however, is susceptible to changes in illumination. A more robust choice is to use one or more statistics (e.g., averages) of the values in a suitable color space, such as hue-saturation-value (HSV).

2. Luminance/color histogram

A richer feature for an image is the grayscale or color histogram. Its advantage is that it is discriminative, easy to compute, and mostly insensitive to translational, rotational, and zooming camera motions. For these reasons, it is widely used. However, it does not represent the spatial distribution of color in an image.

3. Image edges

Another choice for characterizing an image is its edge information. The advantage of this feature is that it is sufficiently invariant to illumination changes and several types of motion, and is related to the human visual perception of a scene. Its main disadvantages are computational cost, noise sensitivity, and, when not post-processed, high dimensionality.

4. Features in transform domain

The information present in the pixels of an image can also be represented by using transformations such as discrete Fourier transform, discrete cosine transform and wavelets. Such transformations also lead to representations in lower dimensions. Disadvantages include high computational cost, effects of blocking while computing the transform domain coefficients, and loss of information caused by retaining only a few coefficients.

5. Motion

This is sometimes used as a feature for detecting shot transitions, but it is usually coupled with other features, since motion itself can be highly discontinuous within a shot (when motion changes abruptly) and is not useful when there is no motion in the video [4].
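Three of the features listed above can be sketched in a few lines. This is a minimal illustration, not a reference implementation: frames are assumed to be lists of rows of integer gray levels in 0..255, the edge measure is a crude sum of neighbouring-pixel differences rather than a proper edge detector, and all function names are hypothetical.

```python
def mean_luminance(frame):
    """Average gray level over all pixels of the frame."""
    pixels = [p for row in frame for p in row]
    return sum(pixels) / len(pixels)


def gray_histogram(frame, bins=4, levels=256):
    """Count pixels in `bins` equal-width bins covering gray levels 0..levels-1."""
    hist = [0] * bins
    for row in frame:
        for p in row:
            hist[p * bins // levels] += 1
    return hist


def edge_strength(frame):
    """Sum of absolute differences between horizontally and vertically adjacent pixels."""
    total = 0
    for y, row in enumerate(frame):
        for x, p in enumerate(row):
            if x + 1 < len(row):
                total += abs(row[x + 1] - p)
            if y + 1 < len(frame):
                total += abs(frame[y + 1][x] - p)
    return total


if __name__ == "__main__":
    frame = [[0, 0, 255, 255], [0, 0, 255, 255]]
    shifted = [[0, 255, 255, 0], [0, 255, 255, 0]]  # bright region moved by one pixel
    print(mean_luminance(frame), gray_histogram(frame), edge_strength(frame))
    print(gray_histogram(shifted) == gray_histogram(frame))
```

The shifted frame has the same histogram as the original, which illustrates why histograms tolerate translation but say nothing about where in the frame a color occurs.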

5. Spatial domain for feature extraction

The size of the region from which individual features are extracted plays an important role in the overall performance of shot change detection algorithms. A small region tends to reduce detection invariance with respect to motion, while a large region might lead to missed transitions between similar shots. In the following, we describe various possible choices:

1. Single pixel

Some algorithms derive a feature for each pixel such as luminance and edge strength. However, such an approach results in a feature vector of very large dimension, and is very sensitive to motion, unless motion compensation is subsequently performed.

2. Rectangular block

Another method is to segment each frame into equal-sized blocks and extract a set of features (e.g., average color or orientation, color histogram) from these blocks. This approach has the advantage of being invariant to small motion of camera and object, as well as being adequately discriminant for shot boundary detection.

3. Arbitrarily shaped region

Feature extraction can also be applied to arbitrarily shaped and sized regions in a frame, derived by spatial segmentation algorithms. This enables the derivation of features based on the most homogeneous regions, thus facilitating a better detection of temporal discontinuities. The main disadvantage is the high computational complexity and instability of region segmentation.

4. Whole frame

The algorithms that extract features (e.g., histograms) from the whole frame have the advantage of being robust with respect to motion within a shot, but tend to have poor performance at detecting the change between two similar shots [4].
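The trade-off between region sizes can be seen with a single feature, the mean gray level, computed over grids of different granularity. The helper `region_means` below is a hypothetical sketch that assumes the frame dimensions are divisible by the grid size; a 1 x 1 grid corresponds to the whole frame, and a grid with one cell per pixel corresponds to the single-pixel case.

```python
def region_means(frame, rows, cols):
    """Mean gray level of each cell in a rows x cols grid, in row-major order.

    Assumes the frame height is divisible by rows and the width by cols.
    """
    block_h = len(frame) // rows
    block_w = len(frame[0]) // cols
    means = []
    for r in range(rows):
        for c in range(cols):
            cell = [
                frame[y][x]
                for y in range(r * block_h, (r + 1) * block_h)
                for x in range(c * block_w, (c + 1) * block_w)
            ]
            means.append(sum(cell) / len(cell))
    return means


if __name__ == "__main__":
    left_bright = [[200, 200, 0, 0], [200, 200, 0, 0]]
    right_bright = [[0, 0, 200, 200], [0, 0, 200, 200]]
    # Whole frame: both frames look identical.
    print(region_means(left_bright, 1, 1), region_means(right_bright, 1, 1))
    # Two blocks: the difference becomes visible.
    print(region_means(left_bright, 1, 2), region_means(right_bright, 1, 2))
```

The two example frames have the same whole-frame mean, so a whole-frame feature cannot separate them, while a two-block grid can.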

6. Similarity Measure

To evaluate the discontinuity between frames based on the selected features, an appropriate similarity or dissimilarity metric needs to be chosen. A wide variety of dissimilarity measures have been used in the literature. Some of the commonly used measures are Euclidean distance, cosine dissimilarity, Mahalanobis distance, and log-likelihood ratio. Another commonly used metric, especially in the case of histograms, is the chi-square metric. Information-theoretic measures such as mutual information and joint entropy between consecutive frames have also been proposed for detecting cuts and fades [4].
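Three of these measures can be written down directly for feature vectors or histograms stored as plain lists. This is a sketch under stated assumptions: the chi-square metric has several variants in the literature, and the symmetric form used here is only one of them; returning 1.0 for a zero vector in the cosine measure is likewise an arbitrary convention chosen for the example.

```python
import math


def euclidean_distance(u, v):
    """Straight-line distance between two feature vectors."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


def cosine_dissimilarity(u, v):
    """1 - cosine similarity; 0 for parallel vectors, 1 for orthogonal ones."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        return 1.0  # assumed convention for an all-zero vector
    return 1.0 - dot / (norm_u * norm_v)


def chi_square_distance(h1, h2):
    """Symmetric chi-square form: sum of (a - b)^2 / (a + b) over non-empty bins."""
    return sum((a - b) ** 2 / (a + b) for a, b in zip(h1, h2) if a + b > 0)


if __name__ == "__main__":
    h_a, h_b = [4, 0, 0, 4], [2, 0, 0, 6]
    print(euclidean_distance(h_a, h_b))
    print(cosine_dissimilarity(h_a, h_b))
    print(chi_square_distance(h_a, h_b))
```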

7. Temporal domain of continuity metric

Another important aspect of shot boundary detection algorithms is the temporal window that is used to perform shot change detection. In general, the objective is to select a temporal window that contains a representative amount of video activity. The following cases are typically used:

1. Two frames

The simplest way to detect discontinuity between frames is to look for a high value of the discontinuity metric between two successive frames. However, such an approach can fail to discriminate between shot transitions and changes within the shot when there is significant variation in activity among different parts of the video or when certain shots contain events that cause brief discontinuities (e.g., photographic flashes). It also has difficulty in detecting gradual transitions.

2. N-frame window

One technique for alleviating the above problems is to detect the discontinuity by using the features of all frames within a suitable temporal window, which is centered on the location of the potential discontinuity.

3. Interval since last shot change

Another method for detecting a shot boundary is to compute one or more statistics from the last detected shot change up to the current point, and to check if the next frame is consistent with them. The problem with such approaches is that there is often great variability within shots, such that statistics computed for an entire shot may not be representative of its end [4].
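The benefit of an N-frame window over a two-frame comparison can be sketched with one common heuristic, which is an assumption of this example rather than a method taken from the text: a boundary is declared at position i only if the difference value there clearly dominates every other value in a window centred on i. Here `diffs[i]` is assumed to hold the dissimilarity between frames i and i+1, and `half_width` and `ratio` are hypothetical parameters.

```python
def window_cuts(diffs, half_width=2, ratio=3.0):
    """Indices whose difference value dominates a centred temporal window.

    diffs[i] is the dissimilarity between frame i and frame i + 1.
    Positions closer than half_width to either end are not tested.
    """
    cuts = []
    for i in range(half_width, len(diffs) - half_width):
        window = diffs[i - half_width:i + half_width + 1]
        others = window[:half_width] + window[half_width + 1:]
        # Require the centre value to exceed the largest neighbour by `ratio`.
        if diffs[i] > ratio * max(others):
            cuts.append(i)
    return cuts


if __name__ == "__main__":
    # One isolated peak (a cut) and two adjacent peaks (e.g., a flash going on and off).
    diffs = [1, 2, 1, 40, 1, 2, 1, 35, 36, 1, 2, 1]
    print(window_cuts(diffs))
```

A brief event such as a photographic flash produces two large values in a row, so neither dominates its window and both are rejected, whereas a plain two-frame threshold would report them.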

8. Shot change detection method

1. Static thresholding

This is the most basic decision method, which entails comparing a metric expressing the similarity or dissimilarity of the features computed on adjacent frames against a fixed threshold. This only performs well if video content exhibits similar characteristics over time, and only if the threshold is manually adjusted for each video.

2. Adaptive Thresholding

The obvious solution to the problems of static thresholding is to vary the threshold depending on a statistic (e.g., the average) of the feature difference metrics within a temporal window.

3. Probabilistic Detection

Perhaps the most rigorous way to detect shot changes is to model the pattern of specific types of shot transitions and, presupposing specific probability distributions for the feature difference metrics in each shot, perform optimal a posteriori shot change estimation.

4. Trained Classifier

A radically different method for detecting shot changes is to formulate the problem as a classification task where frames are classified (through their corresponding features) into two classes, namely “shot change” and “no shot change”, and train a classifier (e.g., a neural network) to distinguish between the two classes [5].
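The first two decision methods can be compared on a toy sequence of frame differences. The sketch below assumes that the adaptive threshold is the mean plus k standard deviations of the neighbouring difference values; this is one plausible choice of statistic, and `half_width` and `k` are hypothetical parameters.

```python
from statistics import mean, pstdev


def static_threshold_cuts(diffs, threshold):
    """Indices whose difference value exceeds one fixed threshold."""
    return [i for i, d in enumerate(diffs) if d > threshold]


def adaptive_threshold_cuts(diffs, half_width=3, k=3.0):
    """Indices whose value exceeds mean + k * std of their temporal neighbours."""
    cuts = []
    for i, d in enumerate(diffs):
        lo = max(0, i - half_width)
        hi = min(len(diffs), i + half_width + 1)
        neighbours = diffs[lo:i] + diffs[i + 1:hi]  # window without the centre
        if not neighbours:
            continue
        threshold = mean(neighbours) + k * pstdev(neighbours)
        if d > threshold:
            cuts.append(i)
    return cuts


if __name__ == "__main__":
    # A calm shot followed by a busy shot; cuts at positions 3 and 10.
    diffs = [2, 3, 2, 30, 3, 2, 3, 20, 22, 21, 80, 20, 21, 22]
    print(static_threshold_cuts(diffs, 15))
    print(static_threshold_cuts(diffs, 50))
    print(adaptive_threshold_cuts(diffs))
```

On this sequence no single fixed threshold works: a low one reports the whole busy shot, and a high one misses the cut in the calm shot. If all neighbours are equal, the standard deviation is zero and the adaptive threshold collapses to the mean, so a practical version would probably add a minimum margin.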

9. Shot Boundary detection techniques

9.1 Pixel based shot boundary detection

This is the simplest method for determining shot boundaries. The difference between corresponding pixels of two consecutive frames is computed. If the difference is greater than some threshold, a shot boundary is assumed [6].
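A minimal sketch of this comparison follows. It assumes that the per-pixel differences are aggregated as a mean absolute difference, which the text does not specify; the function names and the threshold value are hypothetical.

```python
def pixel_difference(frame_a, frame_b):
    """Mean absolute difference between corresponding pixels of two frames."""
    total = 0
    count = 0
    for row_a, row_b in zip(frame_a, frame_b):
        for a, b in zip(row_a, row_b):
            total += abs(a - b)
            count += 1
    return total / count


def pixel_based_cuts(frames, threshold):
    """Indices i for which a boundary is assumed between frame i and frame i + 1."""
    return [
        i
        for i in range(len(frames) - 1)
        if pixel_difference(frames[i], frames[i + 1]) > threshold
    ]


if __name__ == "__main__":
    frames = [
        [[10, 10], [10, 10]],
        [[12, 10], [10, 10]],      # small change within the shot
        [[200, 200], [200, 200]],  # new shot
    ]
    print(pixel_based_cuts(frames, threshold=50))
```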

9.2 Histogram based shot boundary detection

Histograms are the most common method used to detect shot boundaries. The simplest histogram method computes gray-level or color histograms of the two images. If the difference between the two histograms is above a threshold, a shot boundary is assumed.

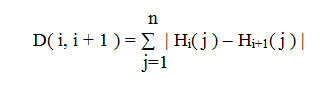

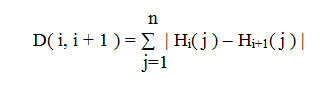

Formula 1

The above equation is used for histogram-based shot boundary detection, where j indicates the gray level value, Hi(j) is the histogram value for gray level j in frame i, and n is the total number of gray levels. This method is less sensitive to object and camera motion than pixel-wise comparison. It detects hard cuts, fades, and dissolves, but fails when there is a large amount of motion [3].
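The histogram comparison can be sketched as follows, assuming the difference is the usual sum of absolute bin differences, D(i, i+1) = Σ_j |H_i(j) − H_{i+1}(j)| over the n gray levels, which matches the symbols described above. Frames are assumed to hold integer gray levels, and the function names are hypothetical.

```python
def gray_histogram(frame, levels=256):
    """H(j): number of pixels with gray level j, for j = 0..levels-1."""
    hist = [0] * levels
    for row in frame:
        for p in row:
            hist[p] += 1
    return hist


def histogram_difference(frame_a, frame_b, levels=256):
    """Sum over all gray levels of |H_a(j) - H_b(j)| (assumed form of the metric)."""
    h_a = gray_histogram(frame_a, levels)
    h_b = gray_histogram(frame_b, levels)
    return sum(abs(a - b) for a, b in zip(h_a, h_b))


if __name__ == "__main__":
    frame = [[0, 255], [0, 0]]
    moved = [[0, 0], [255, 0]]       # same content, object moved
    other = [[255, 255], [255, 255]]  # different content
    print(histogram_difference(frame, moved))
    print(histogram_difference(frame, other))
```

Moving the bright pixel leaves the histogram unchanged, so the difference stays at zero, whereas a pixel-wise comparison of the same two frames would report a change.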

9.3 Block based shot boundary detection

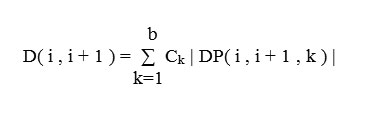

In this technique, each frame is divided into a fixed number of blocks, and the differences between blocks at corresponding positions in frames i and i+1 are used to compute the difference between the frames. If this frame difference is greater than a particular threshold value, a shot break is detected. The following formula is used for block-based shot boundary detection.

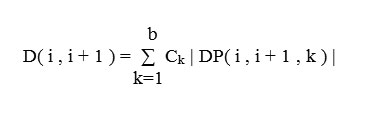

Formula 2

Here each frame is divided into b blocks, DP(i, i+1, k) indicates the difference of the kth block between the ith and (i+1)th frames, and Ck is a predetermined coefficient for block k. The absolute differences of all blocks in two consecutive frames are summed to obtain the difference between the two frames. This method is relatively slow due to the complexity of the formulas, and it cannot identify dissolves, fades, or fast-moving objects, but it is computationally better than pixel-based shot detection [3].
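The weighted block sum can be sketched as below. Two points are assumptions of this example: the frame difference is taken to be the sum over k of Ck · DP(i, i+1, k), and DP is taken to be the absolute difference of the block mean gray levels, since the text does not define it. Square blocks, frame dimensions divisible by the block size, and all function names are likewise hypothetical.

```python
def block_mean(frame, top, left, size):
    """Mean gray level of the size x size block with top-left corner (top, left)."""
    values = [
        frame[y][x]
        for y in range(top, top + size)
        for x in range(left, left + size)
    ]
    return sum(values) / len(values)


def block_frame_difference(frame_a, frame_b, size, coefficients=None):
    """Sum over blocks k of C_k * DP_k, with DP_k = |mean_a(k) - mean_b(k)| (assumed)."""
    corners = [
        (top, left)
        for top in range(0, len(frame_a), size)
        for left in range(0, len(frame_a[0]), size)
    ]
    if coefficients is None:
        coefficients = [1.0] * len(corners)  # equal weight for every block
    total = 0.0
    for c_k, (top, left) in zip(coefficients, corners):
        dp_k = abs(
            block_mean(frame_a, top, left, size) - block_mean(frame_b, top, left, size)
        )
        total += c_k * dp_k
    return total


if __name__ == "__main__":
    frame_a = [[10, 10, 50, 50], [10, 10, 50, 50]]
    frame_b = [[10, 10, 90, 90], [10, 10, 90, 90]]
    print(block_frame_difference(frame_a, frame_b, size=2))
    print(block_frame_difference(frame_a, frame_b, size=2, coefficients=[1.0, 0.5]))
```

The coefficients make it possible to weight some blocks less than others, for example blocks near the frame border.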

9.4 Shot boundary detection using motion activity descriptor

The basis of any video segmentation method is the detection of visual discontinuities along the time domain. During this process, it is necessary to extract visual features that measure the degree of similarity between frames in a given shot. This measure is related to the difference or discontinuity between frames n and n+j, where j ≥ 1 [7].

Conclusion

Different techniques for detecting shot boundaries have been discussed; which one is suitable depends on the content of the video and on how that content changes. As the key frames need to be processed for annotation purposes, important information must not be missed.

References

- http://mmlab.disi.unitn.it/wiki/index.php?title=Shot_Boundary_Detection_Techniques&oldid=39128

- Jordi Mas and Gabriel Fernandez, “Video Shot Boundary Detection based on Color Histogram”, Digital Television Center (CeTVD), La Salle School of Engineering, Ramon Llull University, Barcelona, Spain.

- Swati D. Bendale, Bijal.J.Talati, “Analysis of Popular Video Shot Boundary Detection Techniques in Uncompressed Domain”, International Journal of Computer Applications (0975 – 8887) Volume 60– No.3, December 2012.

- C. Krishna Mohan, “Features for Video Shot Boundary Detection and Classification”, Department of Computer Science and Engineering, Indian Institute of Technology Madras December 2007.

- Costas Cotsaces, Student Member, IEEE, Nikos Nikolaidis, Member, IEEE, and Ioannis Pitas, Senior Member, IEEE, “Video Shot Boundary Detection and Condensed Representation: A Review”.

- Purnima.S.Mittalkod, Dr. G.N Srinivasan, “Shot Boundary Detection Algorithms and Techniques: A Review”, Research Journal of Computer Systems Engineering – An International Journal, Vol – 2, Issue 02, June – 2011.

- Abdelati Malek Amel, Ben Abdelali Abdessalem and Mtibaa Abdellatif, “Video shot boundary detection using motion activity descriptor”, journal of telecommunications, volume 2, issue 1, April 2010.

This work is licensed under a Creative Commons Attribution 4.0 International License.