Anil S Naik

Department of Information Technology, Walchand Institute of Technology, Solapur, India.

Corresponding Author’s Email: anil.nk287@gmail.com

DOI : http://dx.doi.org/10.13005/ojcst12.04.05

Article Publishing History

Article Received on : 21 Jan 2020

Article Accepted on : 13 Feb 2020

Article Published : 13 Feb 2020


ABSTRACT:

An emotion monitoring system for a call center is proposed. It aims to simplify the tracking and management of emotions extracted from call center Employee-Customer conversations. The system is composed of four modules: Emotion Detection, Emotion Analysis and Report Generation, Database Manager, and User Interface. The Emotion Detection module uses the Tone Analyzer to detect the emotion of each statement and falls back on Utterance Analysis when no tone is detected. The tone analyzer detects 14 emotions, including happy, joy, anger, sad, and neutral. The Emotion Analysis module classifies these into three categories: Neutral, Anger, and Joy. Using these categories, it applies a point-scoring technique to calculate the Employee Score. This module also polishes the output of the Emotion Detection module to provide a more presentable sequence of the emotions of the Employee and the Customer. The Database Manager is responsible for managing the database, where it handles the creation and update of data. The Interface module serves as the view and user interface for the whole system. The system comprises an Android application for conversation and a web application to view reports. The Android application was developed using Android Studio to maintain the modularity and flexibility of the system. The local server monitors the conversation and displays the detected emotions of both the Customer and the Employee. The web application was constructed using the Django Framework to maintain its modularity and abstraction by using a model. It provides reports and analysis of the emotions expressed by the customer during conversations. Using the Model View Template (MVT) approach, the emotion monitoring system is scalable, reusable, and modular.

KEYWORDS:

Emotion Analysis; Model View Template (MVT); Django Framework; Android Application

Copy the following to cite this article:

Naik A. S. Text and Voice Based Emotion Monitoring System. Orient. J. Comp. Sci. and Technol; 12(4).


Copy the following to cite this URL:

Naik A. S. Text and Voice Based Emotion Monitoring System. Orient. J. Comp. Sci. and Technol; 12(4). Available from: https://bit.ly/2Sl710G


Introduction

Emotion plays a significant role in successfully communicating one’s

intentions and beliefs. Therefore, emotion recognition has recently become the

focus of several studies. Some of its applications include computer games,

talking toys and call center satisfaction monitoring. In a call center

environment, emotion analytics is important since ineffective handling of

conversations by the agents can often lead to customer dissatisfaction and even

loss of business. In view of this, we propose an Emotion Monitoring system that

performs a prosodic analysis of call center agent and client conversations.

To construct such a system, it helps to establish a few basic points that support a robust emotion monitoring system. There are various types of emotions, such as surprise, anger, happiness, fear, boredom, and sadness, and sometimes an utterance carries no specific emotion at all. We choose only three emotions that are significant in a call center environment: Neutral, Joy, and Anger. Given these emotions, it is desirable to look for specific features that can easily distinguish among them.

A survey of the literature reveals that prosodic features are sufficient for effective classification of emotions. These features include pitch, energy and audible/inaudible contours, rhythm, melody, flatness, and the like. Among these features, the fundamental frequency of an utterance is observed to be very useful in detecting emotions. However, recognition is highly inconsistent between anger and happiness, two of the most important emotions in the call center environment; these emotions are often classified or labeled interchangeably. To select features from the utterance, methods such as forward selection and backward elimination are frequently employed. In earlier work, systems detected four emotions: anger, boredom, happiness or satisfaction, and neutral. Multivariate discriminant analysis was used, and the Median Derivative of Pitch was found to obtain the highest percentage accuracy.

In the emotion detection system, the Tone Analyzer is used to detect emotions, and the detected emotions are classified into three categories. Using this information, it is feasible to build a system that detects the emotions of a call center agent and client during their conversation. It is also feasible to build a web application that reports the performance of each call center agent to their supervisor.1

The development of an emotion monitoring system for call center agents is significant because both call center agents and supervisors can benefit from the feedback reported by the system. The system described in this paper can detect and display the emotions of the call center agent, and the caller’s emotions are detected at the end of the conversation. This will help the Employee manage his emotions and handle the conversation properly. The detected emotions are anger, neutral, and joy. For the supervisor/manager in a call center, the system will also provide statistics on the emotions in call center conversations for monitoring the Employee’s emotional performance.

Classification of Emotions and Its Process

In the diagram, the dataset taken as input is the conversation between the Customer and the Employee, which is treated as the call. The conversation is carried out using two Android apps that are connected to the server. The server monitors the conversation and stores it for emotion detection. In Emotion Detection, an emotion is detected for each statement of the conversation. This phase is an important part of the project. After Emotion Detection, each emotion is classified into one of three categories: Neutral, Joy, and Anger. After classification, the next important phase is Analysis, in which a point-scoring technique is used to calculate the Employee Score for the conversation. The system also monitors the last 60% of the customer’s side of the conversation to check customer satisfaction. When the analysis is complete, the conversation is stored in the database together with the emotion of each statement. The manager uses this stored data to check Employee performance for conversations carried out in the call center. The manager does so through the website, where a graphical representation is used for easier understanding.3

Existing System

Emotion plays a significant role in successfully communicating one’s

intentions and beliefs. As a consequence, emotion recognition has recently

become the focus of several studies. Some of its applications include computer

games, talking toys and call center satisfaction monitoring.

In a call center environment,

emotion analytics is important since ineffective handling of conversations by

the employees can often lead to customer dissatisfaction and even loss of

business. In view of this, we propose a real-time emotion recognition system

that performs a prosodic analysis of call center agent and client

conversations.

Previous research reveals that prosodic features are sufficient for effective classification of emotions. These features include pitch, energy and audible/inaudible contours, rhythm, melody, flatness, and the like. Among these features, the fundamental frequency of an utterance is observed to be very useful in detecting emotions. However, recognition is highly inconsistent between anger and happiness, two of the most important emotions in the call center environment.2

Disadvantages

1. The existing system is based on the frequency of the voice, which does not give an appropriate emotion result.

2. There is no method for checking whether the customer is satisfied or not.

Proposed System

The proposed system is implemented to overcome the disadvantages of the existing system described above. To address the first disadvantage, our system tracks emotion from the conversation using the Tone Analyzer. To address the second, we classify the detected emotions into three categories: Anger, Joy, and Neutral. This classification is used to check Employee performance, and the last 60% of the conversation is used to check customer satisfaction.

Advantages:

1. It helps the manager analyze the working behaviour of all Employees.

2. It helps the manager check whether the Customer is satisfied or not.

3. It helps the Employee check his own performance.

4. It helps the manager easily analyze the performance of the Employee.

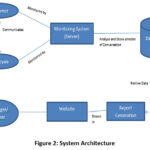

System Architecture

The Customer and the Employee communicate with each other, and this conversation is monitored by the monitoring system. After the conversation is stored, scoring is performed: each message is assigned points according to its emotion. In this way, the conversation is analyzed, and the emotions and score of the conversation are stored in the database. The data is then retrieved from the database and a report is generated. The generated report is displayed to the manager or owner through the web application.
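As a rough illustration of the store-then-report flow described above, the following sketch uses Python's built-in `sqlite3` module. The table name, column names, and the functions `open_database`, `store_message`, and `conversation_report` are hypothetical and are not taken from the system itself, which uses Django models for this purpose.

```python
import sqlite3

# Hypothetical schema: the table and column names are illustrative assumptions.
SCHEMA = """
CREATE TABLE IF NOT EXISTS message (
    id INTEGER PRIMARY KEY AUTOINCREMENT,
    conversation_id INTEGER NOT NULL,
    speaker TEXT NOT NULL,
    text TEXT NOT NULL,
    emotion TEXT NOT NULL,
    points INTEGER NOT NULL
);
"""


def open_database(path=":memory:"):
    """Open (or create) the database and make sure the table exists."""
    conn = sqlite3.connect(path)
    conn.executescript(SCHEMA)
    return conn


def store_message(conn, conversation_id, speaker, text, emotion, points):
    """Store one message together with its emotion and assigned points."""
    conn.execute(
        "INSERT INTO message (conversation_id, speaker, text, emotion, points) "
        "VALUES (?, ?, ?, ?, ?)",
        (conversation_id, speaker, text, emotion, points),
    )
    conn.commit()


def conversation_report(conn, conversation_id):
    """Return the total score and per-emotion message counts for a conversation."""
    total = conn.execute(
        "SELECT COALESCE(SUM(points), 0) FROM message WHERE conversation_id = ?",
        (conversation_id,),
    ).fetchone()[0]
    counts = dict(
        conn.execute(
            "SELECT emotion, COUNT(*) FROM message "
            "WHERE conversation_id = ? GROUP BY emotion",
            (conversation_id,),
        ).fetchall()
    )
    return {"score": total, "emotion_counts": counts}
```

A web view could then call `conversation_report` for each stored conversation and render the result as a chart for the manager.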

Technique or Algorithm Used

The proposed system that we have implemented is mainly for checking customer satisfaction and employee performance in the call center environment. We use two techniques to achieve these goals:

1. The first is the classification technique. Conversation statements are given as input and an emotion is detected for each. There are 14 types of emotions in total, so they are classified into 3 categories as shown in the figure. This classification helps to detect only the required emotions.4

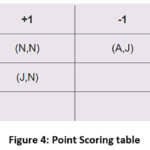

2. The second technique is the Point Scoring method, which uses the point table shown in the figure above. Using this point table, we calculate the Employee Score for the conversation. This score helps to check Employee performance. For customer satisfaction, we monitor the last 60% of the conversation. The Point Scoring table plays an important role here.5

The Point Scoring table assigns a value based on the customer tone: if the tone is “Anger”, the value is +2; if it is “Joy”, the value is +2; and if it is “Neutral”, the value is +1.
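The point table described above can be sketched as a small lookup, using the values exactly as stated in the text. The names `POINT_TABLE` and `employee_score` are hypothetical, and which messages of a conversation are passed in for scoring is left to the caller, since the text does not spell this out.

```python
# Point values as stated in the text: Anger +2, Joy +2, Neutral +1.
POINT_TABLE = {"Anger": 2, "Joy": 2, "Neutral": 1}


def employee_score(categories):
    """Sum the table points for a sequence of category labels.

    Raises KeyError for a label that is not one of the three categories.
    """
    return sum(POINT_TABLE[category] for category in categories)


# Example: one Neutral, one Joy and one Anger message give 1 + 2 + 2 = 5.
print(employee_score(["Neutral", "Joy", "Anger"]))
```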

Modules

The emotion monitoring system for call center employees consists of

the following four modules:

- Emotion Detection

- Emotion Analysis and Report Generation

- Database Manager

- User Interface

Emotion Detection

The conversation between the Customer and the call center Employee (treated as the call in our project) is used for Emotion Detection. The conversation is separated into statements: each message from the Customer and the Employee’s response to it. The emotion of each statement is detected by passing the statement to the IBM Tone Analyzer, which performs the detection and returns the result. If no emotion or tone is detected, the statement goes through Utterance Analysis, whose result is taken as the emotion for that message. This is performed for the entire conversation.7
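The detect-then-fall-back flow in this module can be sketched as follows. This is only an outline under stated assumptions: the two detectors are passed in as plain functions, and the stand-ins `fake_tone_analyzer` and `fake_utterance_analysis` are hypothetical keyword rules, not the real tone analysis service or the system's Utterance Analysis. The `default` label used when both detectors return nothing is also an assumption.

```python
def detect_emotions(statements, tone_detector, utterance_detector, default="neutral"):
    """Label each (speaker, text) statement with an emotion.

    The tone detector is tried first; if it returns None, the utterance
    detector is used. `default` (an assumption) covers the case where
    both detectors return None.
    """
    results = []
    for speaker, text in statements:
        emotion = tone_detector(text)
        if emotion is None:
            emotion = utterance_detector(text)
        if emotion is None:
            emotion = default
        results.append((speaker, text, emotion))
    return results


def fake_tone_analyzer(text):
    """Hypothetical stand-in for the tone analysis service."""
    return "joy" if "thank" in text.lower() else None


def fake_utterance_analysis(text):
    """Hypothetical stand-in for Utterance Analysis."""
    return "anger" if "!" in text else None


conversation = [
    ("customer", "My order never arrived!"),
    ("employee", "I am sorry, let me check."),
    ("customer", "Thank you."),
]
for row in detect_emotions(conversation, fake_tone_analyzer, fake_utterance_analysis):
    print(row)
```

Passing the detectors in as arguments keeps the loop independent of any particular service, so a real client could be swapped in without changing it.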

Emotion Analysis

Emotion Analysis takes its data from the previous phase, Emotion Detection. The input is the conversation with the emotion associated with each message. Each detected emotion is classified into one of three categories: Anger, Neutral, and Joy, as shown in figure 2. The next step is to calculate the scoring points of the Employee using the table shown in fig 3. After this, the last 60% of the Customer’s side of the conversation is analyzed to check Customer satisfaction.5
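A minimal sketch of the two analysis steps, classification into three categories and the check on the last 60% of the customer's messages, is given below. The grouping in `CATEGORY_OF_TONE` is a hypothetical example and does not reproduce the grouping in the figure; the rule inside `customer_satisfied` (no Anger in the window) is likewise a placeholder assumption, because the text does not state how satisfaction is decided from the window.

```python
# Hypothetical grouping of fine-grained tone labels; illustrative only.
# Any label not listed here falls into the Neutral category.
CATEGORY_OF_TONE = {
    "anger": "Anger",
    "frustrated": "Anger",
    "impolite": "Anger",
    "joy": "Joy",
    "excited": "Joy",
    "satisfied": "Joy",
}


def classify(tone):
    """Map a fine-grained tone label to Anger, Joy or Neutral (the default)."""
    return CATEGORY_OF_TONE.get(tone.strip().lower(), "Neutral")


def last_portion(items, percent=60):
    """Return the final `percent` of a list, rounding the window size up."""
    size = (len(items) * percent + 99) // 100
    return items[len(items) - size:]


def customer_satisfied(customer_categories, percent=60):
    """Placeholder rule (an assumption): satisfied if the window has no Anger."""
    return "Anger" not in last_portion(customer_categories, percent)


customer_tones = ["frustrated", "anger", "analytical", "satisfied", "joy"]
categories = [classify(tone) for tone in customer_tones]
print(categories)                      # ['Anger', 'Anger', 'Neutral', 'Joy', 'Joy']
print(customer_satisfied(categories))  # True: the last three messages have no Anger
```

The window size is computed with integer arithmetic so that, for example, five messages give a window of exactly three.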

This module is responsible for sending information about a certain

utterance such as its corresponding emotion, Employee id, caller id and

timestamp of the utterance to the Database Manager module. This module is also

in charge of the analysis of the emotions of an agent. To produce better

analysis, this module computes the statistics of the emotions found in a

conversation.6
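The record sent to the Database Manager and the per-conversation statistics could look like the sketch below. The class `UtteranceRecord` and the function `emotion_statistics` are hypothetical names, and reporting the statistics as percentages is an assumption, since the text does not say which statistics are computed.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class UtteranceRecord:
    """Information about one utterance, as listed in the text."""
    employee_id: str
    caller_id: str
    timestamp: datetime
    emotion: str


def emotion_statistics(records):
    """Return the percentage share of each emotion among the given records."""
    counts = Counter(record.emotion for record in records)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {emotion: 100.0 * n / total for emotion, n in counts.items()}


now = datetime(2020, 1, 21, 10, 0, 0)
records = [
    UtteranceRecord("E1", "C1", now, "Joy"),
    UtteranceRecord("E1", "C1", now, "Joy"),
    UtteranceRecord("E1", "C1", now, "Anger"),
    UtteranceRecord("E1", "C1", now, "Neutral"),
]
print(emotion_statistics(records))
```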

Results and Discussion

We presented an emotion monitoring system for call center agents that has four modules: Emotion Detection, Emotion Analysis and Report Generation, Database Management, and User Interface. The Tone Analyzer is used to detect emotions during agent-client conversations. The three emotions detected by the system are joy, anger, and neutral. A user-friendly interface with a professional look that can assist both agents and supervisors in enhancing client satisfaction was constructed.

Future Enhancement

We plan to take call recordings as input instead of the Android app. For that, we will use speaker diarization, which separates a call recording into Customer and Employee segments.

Acknowledgement

The author acknowledges Walchand Institute of Technology for providing the opportunity to carry out this research.

Funding

Nil

Conflict of Interest

Nil

References

1. P. F. O. Boco, D. K. B. Tercias, K. R. Dela Cruz, EMSys: An Emotion Monitoring System for Call Center Agents, University of the Philippines-Diliman, 2010.

2. C. Busso, S. Lee, S. Narayanan, Analysis of Emotionally Salient Aspects of Fundamental Frequency for Emotion Detection, IEEE Transactions on Audio, Speech, and Language Processing, Vol. 17, No. 4, pp. 582-596, May 2009.

3. L. Cen, W. Ser, Z. Yu, Speech Emotion Recognition Using Canonical Correlation Analysis and Probabilistic Neural Network, Seventh International Conference on Machine Learning and Applications, pp. 859-862, 2008.

4. A. Smailagic, D. Siewiorek, A. Rudnicky, Emotion Recognition Modulating the Behavior of Intelligent Systems, 2013 IEEE International Symposium on Multimedia, pp. 378-383, 2013.

5. L. Devillers, L. Lamel, I. Vasilescu, Emotion Detection in Task-oriented Spoken Dialogues, IEEE International Conference on Multimedia and Expo, pp. 549-552, 2003.

6. C. M. Lee, S. Narayanan, Toward Detecting Emotions in Spoken Dialogs, IEEE Transactions on Audio, Speech, and Language Processing, Vol. 13, No. 2, pp. 293-302, March 2005.

7. D. Morrison, R. Wang, L. De Silva, W. L. Xu, Real-time Spoken Affect Classification and its Application in Call-Centers, Third International Conference on Information Technology and Applications, 2005.

This work is licensed under a Creative Commons Attribution 4.0 International License.